A disclaimer, as with all the experiments I do: do not take what I say as gospel. I don't have 20 years of experience; this is what I see as I work through the implementation details of shaders and R3F/Three.js.

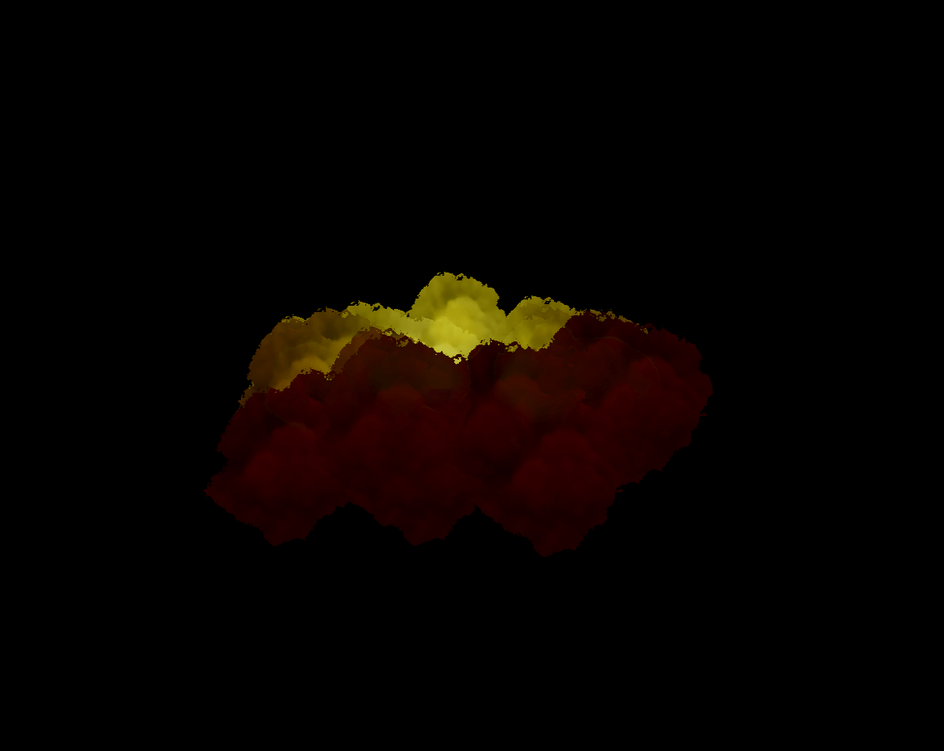

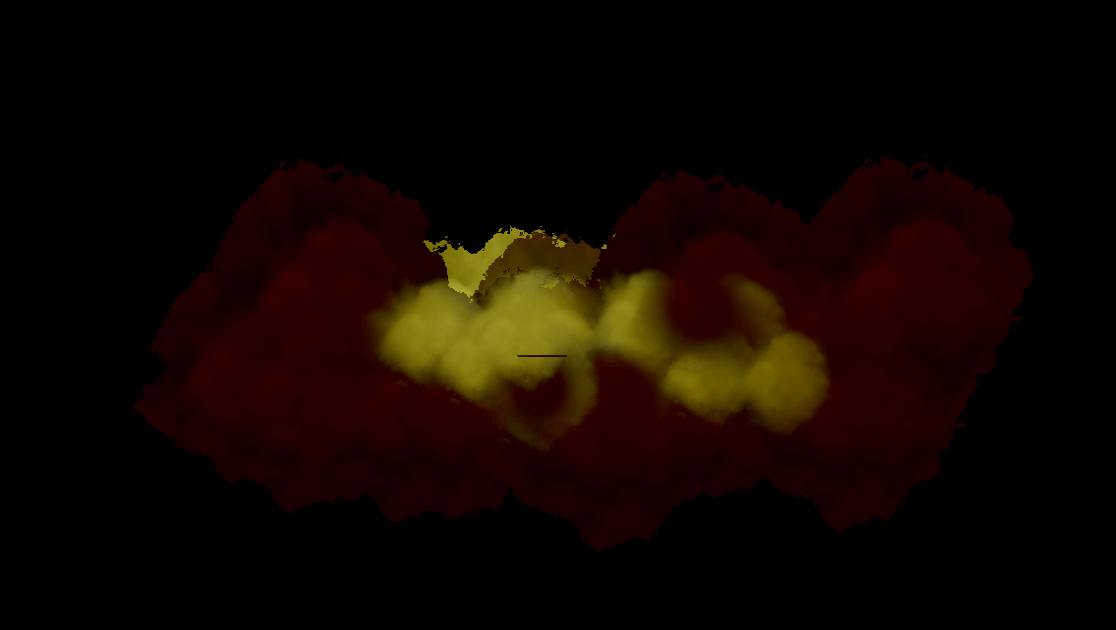

The transparency issue is an old one with WebGL. The basic issue, as I see it, is that when there is transparency and variation in foreground and background brightness, the brighter colours appear to shine through the darker foreground colours. This becomes a problem when brighter objects in the background are obscured by darker objects in front. Look at the image below.

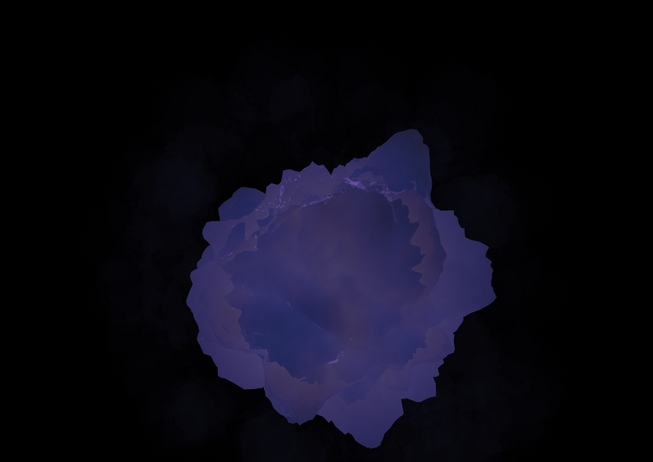

Well, let's say we have a uniform colour with no lights. You would get something like this:

You can see there are absolutely no issues here, and we have transparency and colours, right? Well, the difference is that there is little variation in colour: the colour is the same 1 unit and 2 units away from the camera, mainly because there are no lights causing variation between the foreground and the background.

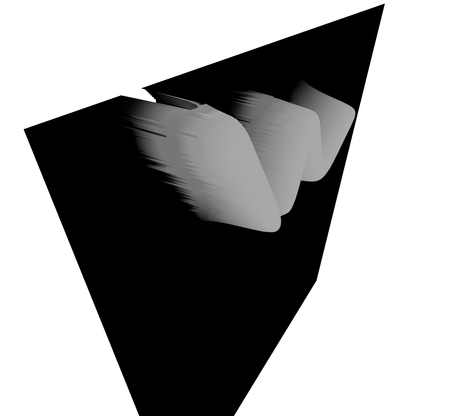

Below is an image where I have just done a linear interpolation (a basic mix between two colours) of the opaque render and the transparent render (explained a bit below):

If you have two planes with the same opacity but different colours (one lighter and one darker), the final colour of a pixel will be a blend of the colours of both planes. The lighter colour will contribute more to the final colour because it allows more light to pass through, while the darker colour will contribute less. A ChatGPT line (could you tell? 😂).

Let's look at some of the maths behind this and it will become clearer. I say maths; it's just a very basic vector example:

(Below are a couple of vec3s as r/g/b or x/y/z, in the range 0.0 to 1.0.)

```glsl
vec3 black = vec3(0.0, 0.0, 0.0);

// or

vec3 white = vec3(1.0, 1.0, 1.0);
```

So if we class the floats as data or colour, there is more data and colour in the white vec3: the numbers are bigger. If you get the magnitude (i.e. the length) of each, the magnitude of the white vec3 is bigger too: `length(black)` is 0.0, while `length(white)` is the square root of 3, roughly 1.732. So WebGL appears to take more from the brighter colours when darker colours obscure them.
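To check the numbers, here is a minimal JavaScript sketch of the two ideas above. It computes the length of a colour vector, and it applies the standard "over" blend that `THREE.NormalBlending` uses for non-premultiplied alpha (result = src * alpha + dst * (1 - alpha)). The `length` and `blendOver` helpers are hypothetical names written just for this illustration. The 0.1/0.9 plane colours and the 0.4 opacity are made-up values. The lit plane is treated as already being in the framebuffer.

```javascript
// Magnitude of a colour/vector, the same idea as GLSL's length()
function length(v) {
  return Math.hypot(...v);
}

// Standard "over" blending for straight (non-premultiplied) alpha, per channel:
// result = src * alpha + dst * (1 - alpha)
function blendOver(src, alpha, dst) {
  return src.map((s, i) => s * alpha + dst[i] * (1 - alpha));
}

const black = [0.0, 0.0, 0.0];
const white = [1.0, 1.0, 1.0];
console.log(length(black)); // 0
console.log(length(white)); // ~1.732 (the square root of 3)

// Hypothetical values: a dark plane (0.1) at 40% opacity in front of a lit plane (0.9)
const darkFront = [0.1, 0.1, 0.1];
const litBehind = [0.9, 0.9, 0.9];
const pixel = blendOver(darkFront, 0.4, litBehind);

// Each channel is ~0.58: 0.04 comes from the dark plane, 0.54 from the lit plane behind it
console.log(pixel);
```

Even though the dark plane is in front, the lit plane behind it ends up supplying over 90% of the final value. That is the shine-through described above.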

So although ChatGPT was used for the description, we can easily validate it, and it does make sense mathematically.

So if lighter colours contribute more and darker ones less, and we have a light in the middle of the field of planes, then when the light shines on a plane in the distance it brightens it. That gives us the image shown above, i.e. bright colours shining through the darker ones in the foreground. Not what we want!

I have to give credit to Cody for this, as I am rubbish at finding out the concepts or names of things. There is a way to mitigate this effect, and that's order independent transparency.

The concept is simple:

- set everything to be more opaque, i.e. less transparent

- this way we get solid colours and no light shine-through artefact

- render this to a render target

- set everything to be transparent, i.e. more see-through (and with the artefact)

- render this to a render target

- finally, mix these two textures in a postprocessing shader pass

- avoid, or at least somewhat mitigate, the lighter colours bleeding through the darker colours

The last point there is key: this isn't a silver bullet that fixes the whole problem. It mitigates it and gives somewhat better results.

So, enough of the chitchat: how do you actually implement this? (Well, one way of implementing it is as follows.)

Scene Setup

So we have a pretty basic setup: a few planes, all facing the camera, scattered over a relatively small distance of three units.

```jsx
return (
  <group>
    <OrbitControls ref={controls} />
    <gridHelper />
    <ambientLight intensity={0.57} color="red" />
    <pointLight
      ref={pointLight}
      position={[0.0, 0.0, 0.0]}
      intensity={200}
      color="yellow"
    />
    <instancedMesh ref={meshRef} args={[null, null, NUMBER_OF_PARTICLES]}>
      <planeGeometry attach="geometry" args={[40, 40]} />
      <meshStandardMaterial
        attach="material"
        ref={matRef}
        side={THREE.DoubleSide}
        map={smoke}
        onBeforeCompile={OBC}
        blending={THREE.NormalBlending}
      />
    </instancedMesh>
    <EffectComposer ref={composerRef}>
      <AdditionEffect
        ref={addShaderPassRef}
        opaqueScreen={opaqueScreenTexture.texture}
        transparentScreen={transparentScreenTexture.texture}
        renderToScreen
      />
    </EffectComposer>
  </group>
);
```

Pretty standard stuff. The planes are represented by the instancedMesh and are then oriented by this section of the useFrame, which updates them every frame:

```jsx
useFrame(({ clock, gl, camera: camA, scene }) => {
  if (matRef.current) {
    updatePlanes({
      ref: matRef.current,
      clock,
    });

    // other code ....
  }
});
```

which does this:

```jsx
const matrix = new THREE.Matrix4();
const transform = new THREE.Object3D();
// Scratch quaternion for the camQ uniform (assumed to be declared alongside these)
const quart = new THREE.Quaternion();

const updatePlanes = useCallback(({ ref, clock }) => {
  if (ref) {
    const shader = ref.userData.shader;

    if (shader) {
      shader.uniforms.camQ = {
        value: quart.copy(camera.quaternion),
      };
      shader.uniforms.time = {
        value: clock.elapsedTime,
      };

      for (let i = 0; i < NUMBER_OF_PARTICLES; i++) {
        // onscreen

        // Read the matrix of instance i into `matrix`
        meshRef.current.getMatrixAt(i, matrix);

        // We decompose, i.e. store the position/rotation/scale in an
        // Object3D (the base class of most Three objects).
        matrix.decompose(
          transform.position,
          transform.quaternion,
          transform.scale,
        );

        // Apply the rotation of the camera to the Object3D
        transform.quaternion.copy(camera.quaternion);

        // Rebuild the matrix, since we modified the transform manually
        transform.updateMatrix();

        // Then set this modified matrix on the instance again
        meshRef.current.setMatrixAt(i, transform.matrix);
      }

      meshRef.current.instanceMatrix.needsUpdate = true;
    }
  }
}, []);
```

I've left some comments above to show what we are doing; hopefully it's self-explanatory.

So we have planes rotated towards the camera, but how can we use lights? Well, the best way to do this is to utilise the built-in materials. How can we do this?

onBeforeCompile, as I've used in examples before, allows us to do this: we can inject shader code into the default built-in shaders that Three provides.

We will use a MeshStandardMaterial for the instanced planes. This allows us to get quite realistic light effects/reactions.

The onBeforeCompile pattern goes as follows:

```jsx
const OBC = useCallback((shader) => {
  shader.uniforms = {
    ...shader.uniforms,
    ...customUniforms,
  };

  shader.vertexShader = shader.vertexShader.replace(
    'something defined in the inbuilt shader',
    `something defined in the inbuilt shader +
      replacement code
    `,
  );

  shader.fragmentShader = shader.fragmentShader.replace(
    'something defined in the inbuilt shader',
    `something defined in the inbuilt shader +
      replacement code
    `,
  );

  matRef.current.userData.shader = shader;
}, []);
```

We add any custom uniforms into the uniforms object alongside the original uniforms. Do not forget the original uniforms: I have spent a few hours debugging in the past after forgetting to spread them. The built-in shaders are just strings before they are compiled, so we can find a string fragment and replace it with itself followed by our custom code.
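Because the shader source really is just a string, the replace-with-itself trick can be tried outside of Three entirely. Below is a minimal plain-JavaScript sketch. The `shader` object is a hypothetical, heavily simplified stand-in for the one Three passes to onBeforeCompile; the real one has many more uniforms and much longer source. `time` is just an example custom uniform.

```javascript
// Hypothetical, simplified stand-in for the shader object Three hands to onBeforeCompile
const shader = {
  uniforms: {
    diffuse: { value: [1, 1, 1] },
    opacity: { value: 1.0 },
  },
  fragmentShader: [
    "#include <common>",
    "uniform vec3 diffuse;",
    "uniform float opacity;",
    "void main() {",
    "  vec4 diffuseColor = vec4( diffuse, opacity );",
    "  gl_FragColor = diffuseColor;",
    "}",
  ].join("\n"),
};

const customUniforms = { time: { value: 0.0 } };

function injectTime(shader) {
  // Spread the original uniforms first so the built-in ones are not lost
  shader.uniforms = { ...shader.uniforms, ...customUniforms };

  // Replace an anchor line with itself plus our uniform declaration
  shader.fragmentShader = shader.fragmentShader.replace(
    "#include <common>",
    "#include <common>\nuniform float time;",
  );

  // Same trick inside main(): keep the original line, then modify the colour
  shader.fragmentShader = shader.fragmentShader.replace(
    "vec4 diffuseColor = vec4( diffuse, opacity );",
    "vec4 diffuseColor = vec4( diffuse, opacity );\n  diffuseColor.rgb *= 0.5 + 0.5 * sin( time );",
  );
}

injectTime(shader);
console.log(Object.keys(shader.uniforms)); // logs the keys diffuse, opacity, time
console.log(shader.fragmentShader);
```

String.prototype.replace with a string pattern only replaces the first match. If the anchor text isn't found, the source comes back unchanged without any error. So it's worth logging the final string while debugging.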

Save a reference to the shader in the material's userData object, which is there for storing any custom data we need. This follows the official pattern, by the way; nothing new here. This way we can do something along the lines of:

```jsx
if (matRef.current.userData?.shader) {
  matRef.current.userData.shader.uniforms.time.value = clock.elapsedTime;
}
```

And because the shader is stored by reference, we can update the original uniforms object. As it happens, we don't need to update any material uniforms here, just the shaderPass uniforms, but I wanted to show you again how this is possible.
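Here's a tiny plain-JavaScript illustration of why that works. Objects are passed around by reference, so the object stored in userData and the one the renderer holds on to are the same object. The `material`, `shaderHeldByRenderer` and `onBeforeCompile` names are hypothetical stand-ins, not Three itself.

```javascript
// Hypothetical stand-ins: a "material" and the shader object the renderer keeps hold of
const material = { userData: {} };
const shaderHeldByRenderer = { uniforms: { time: { value: 0 } } };

// What the OBC callback does with its argument: store a reference, not a copy
function onBeforeCompile(shader) {
  material.userData.shader = shader;
}
onBeforeCompile(shaderHeldByRenderer);

// Later, e.g. every frame: write through the stored reference
if (material.userData?.shader) {
  material.userData.shader.uniforms.time.value = 1.25;
}

// Both names point at the same object, so the renderer's object sees the update
console.log(material.userData.shader === shaderHeldByRenderer); // true
console.log(shaderHeldByRenderer.uniforms.time.value); // 1.25
```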

As you can see below, the material itself has no transparency settings at all. So where are they?

```jsx
<instancedMesh ref={meshRef} args={[null, null, NUMBER_OF_PARTICLES]}>
  <planeGeometry attach="geometry" args={[40, 40]} />
  <meshStandardMaterial
    attach="material"
    ref={matRef}
    side={THREE.DoubleSide}
    map={smoke}
    onBeforeCompile={OBC}
    blending={THREE.NormalBlending}
  />
</instancedMesh>
```

We can apply all of the settings in the render loop every frame, oscillating between the transparent and opaque settings. This gives us fine control over the effect.

RenderLoop, settings and renderTargets

The renderLoop is the place where the scene and all of its child objects get rendered onto our screen. This is how I've always thought of it; it's probably a gross under-description of the render loop and all of its duties.

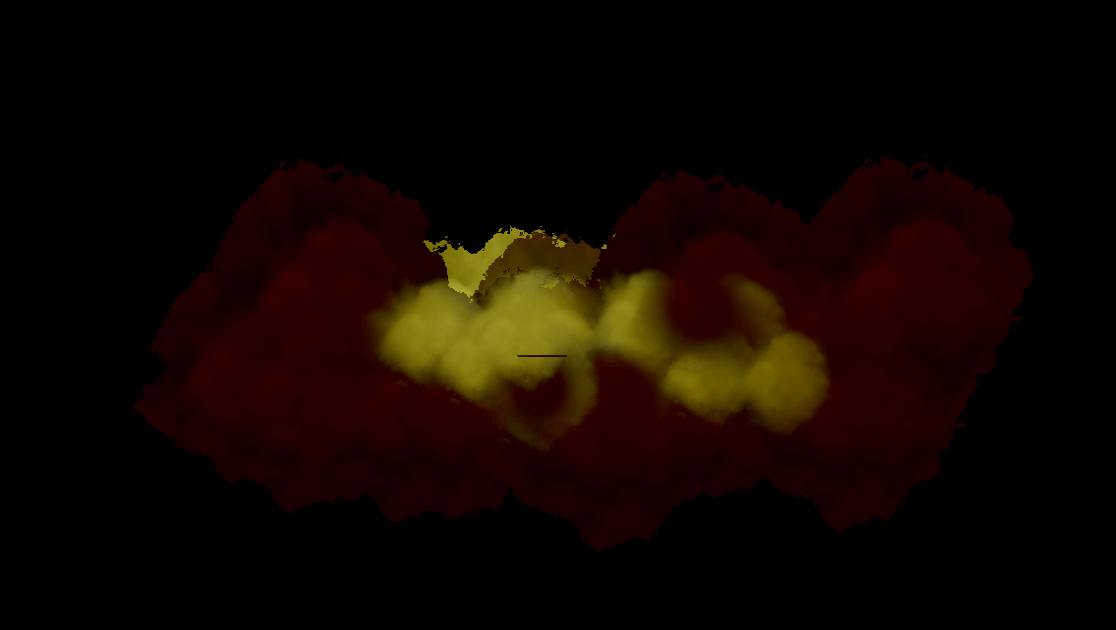

useFrame can be used to hijack this renderLoop and give us fine control. We will draw the opaque version of the scene to one renderTarget and then draw a transparent version of the scene to a different renderTarget. In very basic terms, this is like taking a couple of snapshots of the current render, defined by the render target's settings and resolution, i.e. the width/height of the viewport.

So what does this look like?

```jsx
useFrame(({ clock, gl, camera: camA, scene }) => {
  // Bail out until the material ref is attached
  if (!matRef.current) return;

  updatePlanes({
    ref: matRef.current,
    clock,
  });

  // Opaque / more dark render
  matRef.current.alphaToCoverage = true;

  matRef.current.alphaTest = 0.3;
  matRef.current.depthWrite = true;
  matRef.current.transparent = false;
  matRef.current.opacity = 1.0;

  gl.setRenderTarget(opaqueScreenTexture);
  gl.render(scene, camera);

  if (addShaderPassRef?.current) {
    addShaderPassRef.current.uniforms.get("opaqueScreen").value =
      opaqueScreenTexture.texture;
  }
  // Opaque / more dark render

  // transparent / more bright render
  matRef.current.alphaToCoverage = false;

  matRef.current.alphaTest = 0.0;
  matRef.current.depthWrite = false;
  matRef.current.transparent = true;
  matRef.current.opacity = 0.4;
  gl.setRenderTarget(transparentScreenTexture);
  gl.render(scene, camera);

  if (addShaderPassRef?.current) {
    addShaderPassRef.current.uniforms.get("transparentScreen").value =
      transparentScreenTexture.texture;
  }
  // transparent / more bright render
});
```

The opaque settings basically disable transparency and use alphaTest, which is an arbitrary alpha cut-off: fragments with an alpha below it are discarded. I also played around with alphaToCoverage on Three's built-in materials, as the Discord server suggested playing around with alpha hashing etc.

The transparent settings are pretty basic. As all of the GitHub issues suggest, you should typically disable depthWrite when transparent is set to true.

The overall mechanics are: set a renderTarget, then render the scene to that renderTarget. This particular technique requires two renders of the scene per frame, so depending on your scene you may or may not be able to afford the overhead. It will require some trial and error.

But yeah: render opaque, render transparent, and then set the output colour of the shaderPass to one of two mixes of the opaque and transparent colours. Which mix is used depends on the magnitude of each vec4. To put it another, clearer way: it depends on which rgba vec4 has more colour/data.
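Since the two passes only differ by a handful of material properties, one way to keep the render loop tidy is to store each set of settings in an object and apply it with Object.assign. Object.assign goes through property setters, so this should also work on a real Three material. This is a plain-JavaScript sketch. `OPAQUE_SETTINGS`, `TRANSPARENT_SETTINGS` and `renderPass` are hypothetical names, and `render` is a stub standing in for `gl.setRenderTarget(...)` followed by `gl.render(scene, camera)`. The values are the ones from the useFrame above.

```javascript
// Settings copied from the useFrame above
const OPAQUE_SETTINGS = {
  alphaToCoverage: true,
  alphaTest: 0.3,
  depthWrite: true,
  transparent: false,
  opacity: 1.0,
};

const TRANSPARENT_SETTINGS = {
  alphaToCoverage: false,
  alphaTest: 0.0,
  depthWrite: false,
  transparent: true,
  opacity: 0.4,
};

// Hypothetical helper: apply one set of settings, then render into the given target
function renderPass(material, settings, target, render) {
  Object.assign(material, settings);
  render(target);
}

// Stub material and renderer so the sketch runs without Three
const material = {};
const log = [];
const render = (target) =>
  log.push({ target, opacity: material.opacity, transparent: material.transparent });

renderPass(material, OPAQUE_SETTINGS, "opaqueScreenTexture", render);
renderPass(material, TRANSPARENT_SETTINGS, "transparentScreenTexture", render);

// One entry per pass, recording the settings the material had when it was rendered
console.log(log);
```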

Custom shader Pass using the postprocessing library

Before you look at this, go and read the wiki for the postprocessing library; it is full of implementation details on how to customise an effect or shader pass. Here's the wiki.

```jsx
import React, { forwardRef, useMemo } from "react";
import { Uniform } from "three";
import { BlendFunction, Effect, EffectAttribute } from "postprocessing";
import * as THREE from "three";

const fragmentShader = `...`;

let _uParamOpaque;
let _uParamTransparent;

// Effect implementation
class MyCustomEffectImpl extends Effect {
  constructor({ opaqueScreen = null, transparentScreen = null } = {}) {
    super("AdditionEffect", fragmentShader, {
      attributes: EffectAttribute.CONVOLUTION | EffectAttribute.DEPTH,
      uniforms: new Map([
        ["opaqueScreen", new Uniform(opaqueScreen)],
        ["transparentScreen", new Uniform(transparentScreen)],
      ]),
    });

    _uParamOpaque = opaqueScreen;
    _uParamTransparent = transparentScreen;
  }

  update(renderer, inputBuffer, deltaTime) {
    this.uniforms.get("opaqueScreen").value = _uParamOpaque;
    this.uniforms.get("transparentScreen").value = _uParamTransparent;
  }
}

const AdditionEffect = forwardRef(
  ({ opaqueScreen, transparentScreen }, ref) => {
    const effect = useMemo(() => {
      return new MyCustomEffectImpl({ opaqueScreen, transparentScreen });
    }, [opaqueScreen, transparentScreen]);
    return <primitive ref={ref} object={effect} dispose={null} />;
  },
);

export { AdditionEffect };
```

There's not much to explain here; it's all ripped from the npm package's wiki. A couple of things to note. First, to use depth you HAVE to set this:

```js
attributes: EffectAttribute.CONVOLUTION | EffectAttribute.DEPTH,
```

In this particular case we don't use depth, but I wanted to highlight it. Without this attribute, I think the depth value in the shader is just 0, as no depth data is sent through to the shaderPass. It is also clear from this example that there is a strict way of laying out and defining uniforms. That brings me to my second point, how to set one of these uniforms: it DOES NOT follow the usual pattern for setting uniforms, and the syntax is slightly different:

```js
addShaderPassRef.current.uniforms.get("transparentScreen").value =
  transparentScreenTexture.texture;
```

This is different because we used a Map in the shaderPass, and therefore we have to do:

```js
.uniforms.get(...).value
```

It might not seem obvious at first and can cause confusion, so it's worth highlighting.
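The difference is just JavaScript's Map versus a plain object. Below is a small sketch. The `Uniform` class here is a minimal stand-in for Three's `Uniform`, which likewise just wraps a `value` property, so the sketch runs without Three installed. The string values stand in for textures.

```javascript
// Minimal stand-in for Three's Uniform, which wraps a single `value` property
class Uniform {
  constructor(value) {
    this.value = value;
  }
}

// postprocessing Effect style: uniforms live in a Map
const effectUniforms = new Map([
  ["opaqueScreen", new Uniform(null)],
  ["transparentScreen", new Uniform(null)],
]);
effectUniforms.get("transparentScreen").value = "transparent texture";

// Usual material / onBeforeCompile style: uniforms live in a plain object
const materialUniforms = { time: new Uniform(0) };
materialUniforms.time.value = 1.5;

// The easy mistake: bracket access on a Map does not read its entries
console.log(effectUniforms["transparentScreen"]); // prints: undefined
console.log(effectUniforms.get("transparentScreen").value); // prints: transparent texture
```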

So now we have the scene setup, the shaderPass setup and the renderLoop defined. What about the shader itself?

The shaderPass and mixing the opaque and transparent textures

The shader is really, really basic; let me explain.

```js
const fragmentShader = `
uniform sampler2D opaqueScreen;
uniform sampler2D transparentScreen;

void mainImage(const in vec4 inputColor, const in vec2 uv, const in float depth, out vec4 outputColor) {
  vec4 opaque = texture(opaqueScreen, uv);
  vec4 transparent = texture(transparentScreen, uv);

  float opaqueMagnitude = length(opaque);
  float transparentMagnitude = length(transparent);

  if (transparentMagnitude >= opaqueMagnitude) {
    outputColor = mix(transparent, opaque, 0.99);
  } else {
    outputColor = mix(transparent, opaque, 0.6);
  }
}`;
```

In the shaderPass code we defined the uniforms, and in the useFrame we set them; now it's time to consume them.

To put it plainly: the snapshots of the scene, taken with the opaque and then the transparent settings on the plane material, are in the renderTargets. So we can grab their textures and pass them in as uniforms, as we have done. Now sample the textures like so:

```glsl
vec4 opaque = texture(opaqueScreen, uv);
vec4 transparent = texture(transparentScreen, uv);
```

This uses the uv parameter passed into mainImage, the entry point whose signature the postprocessing library defines.

Here's the interesting bit.

If we want the magnitude of a vector, i.e. how far it extends from the origin to its end point, we can use the length function in GLSL.

We can use this in a similar way with colours, which are also represented by vec3s and vec4s (rgb or rgba) in GLSL shaders. If we want to know how colourful a colour is, in the sense of how much colour/data it holds (roughly, how bright it is), we can also use GLSL's length function.

Great, so we now know which of the transparent or opaque textures is more colourful at each pixel. Remember the start of this article: mixing more colourful vectors with darker, less colourful ones results in a bias towards the more colourful one. That is the one with more data, the one closer to 1.0 in each of the vector channels (x/y/z or r/g/b).

Now that we can distinguish between the more colourful and the less colourful, we have two options. We can just do a swap, which can and does cause artefacts (as does using the plain GLSL mix function with 0.5 as the third parameter). Or we can mix the two colours, weighting the mix towards the opaque colour by an amount that depends on which colour has the greater magnitude.

```glsl
// If the transparent colour is brighter, i.e. has more colour than the opaque,
// then we just mix these two colours with a large bias towards the
// opaque colour.
if (transparentMagnitude >= opaqueMagnitude) {
  outputColor = mix(transparent, opaque, 0.99);
} else {
  // Otherwise, if the transparent has less colour than the opaque, we
  // still have a bias towards the opaque, but a weaker one (0.6 instead of 0.99).
  outputColor = mix(transparent, opaque, 0.6);
}
```
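To get a feel for the numbers, here is the same per-pixel logic ported to plain JavaScript. This is an illustration only; the effect itself runs the GLSL above. `length4`, `mix4` and `combine` are hypothetical helper names. `mix4` follows GLSL's definition mix(x, y, a) = x * (1 - a) + y * a. The sample colours are made up.

```javascript
// GLSL length() on a vec4: note it includes the alpha channel
const length4 = (v) => Math.hypot(...v);

// GLSL mix(x, y, a) = x * (1 - a) + y * a, component-wise
const mix4 = (x, y, a) => x.map((xi, i) => xi * (1 - a) + y[i] * a);

// Same branches and weights as the mainImage shader above
function combine(opaque, transparent) {
  if (length4(transparent) >= length4(opaque)) {
    return mix4(transparent, opaque, 0.99);
  }
  return mix4(transparent, opaque, 0.6);
}

// Made-up pixel where a lit plane shines through a dark one in the transparent render
const darkPixel = combine([0.2, 0.2, 0.2, 1.0], [0.7, 0.7, 0.7, 1.0]);
console.log(darkPixel); // rgb ~0.205: almost entirely the opaque colour

// Made-up pixel where the lit plane is unobstructed and brighter in the opaque render
const litPixel = combine([0.8, 0.6, 0.2, 1.0], [0.5, 0.4, 0.2, 1.0]);
console.log(litPixel); // rgb ~0.68, 0.52, 0.2: 60% opaque, 40% transparent
```

One thing the port makes visible: `length` on a vec4 includes alpha, so any difference in the alpha channel of the two render targets also feeds into which branch is taken. Comparing `length(opaque.rgb)` against `length(transparent.rgb)` would compare colour only. That variation is untested here, so treat it as an idea to experiment with rather than a fix.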

But why would the transparentMagnitude ever be less than the opaqueMagnitude?

This happens when the camera is at an angle where the closer, darker planes do not obscure the lit planes in the background. The further-away planes are lit up by a light, while the closer planes are darker because they are further away from the light.

In this case, the further-away, lighter planes are not visually obstructed by the darker planes, so both renderTargets/textures contain the lit planes unobstructed from the camera. The lit planes in the opaque texture, rendered at full opacity, can then have more colour than the same planes in the transparent texture, rendered at an opacity of 0.4.

Using this technique, we have mitigated bright colours shining through the darker planes in the foreground.

Conclusion

Hopefully me waffling on has been useful and has highlighted a technique (order independent transparency) for mitigating the well-documented WebGL transparency issue, which can cause quite a lot of visual problems. If you have any questions or issues, let me know; I'm more than happy to try and help. Until next time.