Something that took me a while to understand is that there isn’t a one-to-one correlation between vertex shader runs and fragment shader runs. Enter interpolation.

Background

The two key shaders that define how we process data on the GPU are vertex and fragment shaders. Other platforms also offer geometry and compute shaders, each with its own purpose.

Uniforms, varyings and attributes

Uniforms are how we pass data from the CPU side (our client-side threejs code) to the GPU side. A uniform holds the same value for every vertex and fragment in a draw call. Supported types include, but are not limited to:

- number (float/int)

- arrays (fixed size == fixed memory)

```glsl
// i.e.
uniform vec3 colors[10];
// an array of vec3's of size ten, or ten slots
// for data in the format vec3 (or any other
// basic type)
```

- textures (2d/3d)

- vector3 / vector4

The most important part of using these is understanding the hardware limitations. For example, find out the maximum number of uniforms (and therefore the maximum array size) your GPU allows, then plan within that with a comfortable margin.
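One rough way to plan that margin is sketched below in JavaScript. It rests on a conservative assumption: each element of a `vec3` uniform array takes one full uniform vector slot, and each `mat4` takes four. In the browser, the limit itself would come from a WebGL context via `gl.getParameter(gl.MAX_VERTEX_UNIFORM_VECTORS)` (or `MAX_FRAGMENT_UNIFORM_VECTORS`). The helper name `maxVec3ArrayLength` and the 25% default margin are hypothetical choices for illustration.

```javascript
// Hypothetical helper: estimate how long a `uniform vec3 name[N]` array can be.
// Conservative assumption: every vec3 array element occupies one full
// uniform vector (4 floats), and a mat4 occupies four.
// In a browser the limit would come from a WebGL context, e.g.
//   gl.getParameter(gl.MAX_VERTEX_UNIFORM_VECTORS)
function maxVec3ArrayLength(maxUniformVectors, otherUniformVectors, margin = 0.25) {
  // Stay a safety margin below the hardware limit.
  const budget = Math.floor(maxUniformVectors * (1 - margin));
  // Whatever the other uniforms don't use is left for the array.
  return Math.max(0, budget - otherUniformVectors);
}

// Assumption for this example: the only other uniforms are
// projectionMatrix and modelViewMatrix, two mat4s at 4 vectors each.
const otherUniforms = 4 + 4;
// 128 is the minimum MAX_VERTEX_UNIFORM_VECTORS that WebGL 1 guarantees.
console.log(maxVec3ArrayLength(128, otherUniforms)); // 88
```

Real drivers may pack uniforms more tightly than this, so treat the result as a safe lower bound rather than an exact figure.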

Varyings are like connectors between the vertex and fragment shaders: the vertex shader writes them and the fragment shader reads them.

```glsl
// vertex shader
varying vec3 color;

void main () {

  gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);

  // Red
  color = vec3(1.0, 0.0, 0.0);
}

// fragment shader
varying vec3 color;

void main () {
  gl_FragColor = vec4(color, 1.0);
}
```

This is a one-way process: vertex → fragment.

Finally, attributes are per vertex, so you need to supply a value of the same type for every vertex. If a geometry has 4 vertices, each attribute needs 4 data points.
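You can check the one-data-point-per-vertex rule on the CPU before uploading. The sketch below assumes the data is a flat typed array like the one passed to `BufferAttribute`. The helper name `checkAttribute` is hypothetical and not a three.js API; three.js exposes the equivalent number as `BufferAttribute.count`.

```javascript
// Hypothetical helper: verify a flat typed array holds exactly one
// data point of `itemSize` channels per vertex.
function checkAttribute(array, itemSize, vertexCount) {
  if (array.length % itemSize !== 0) {
    throw new Error(`length ${array.length} is not a multiple of itemSize ${itemSize}`);
  }
  // Same idea as three.js BufferAttribute.count: length / itemSize.
  const count = array.length / itemSize;
  if (count !== vertexCount) {
    throw new Error(`expected ${vertexCount} data points, got ${count}`);
  }
  return count;
}

// 4 vertices, each with a vec3 offset: 4 * 3 = 12 floats.
const quadOffsets = new Float32Array([
  0, 0, 0,
  1, 0, 0,
  0, 1, 0,
  1, 1, 0,
]);
console.log(checkAttribute(quadOffsets, 3, 4)); // 4
```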

```javascript
import { BufferAttribute, BufferGeometry, Vector3 } from 'three';

const geometry = new BufferGeometry();

// Key things here: a BufferAttribute MUST have a typed array, and
// the number of channels (components) per data point must match
// the 2nd parameter (itemSize)
// i.e.
// Single instance
const data = new Vector3(1, 3, 9);
// 2 instances, each with 3 channels
const dataArray = [
  1, 3, 9,
  1, 3, 9,
];

// This setAttribute call declares 3 channels per data point
geometry.setAttribute('offset', new BufferAttribute(new Float32Array(dataArray), 3));
```

```glsl
// vertex shader
attribute vec3 offset;

void main () {
  gl_Position = projectionMatrix * modelViewMatrix * vec4(position + offset, 1.0);
}
```
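To see how attributes pair up with vertex runs, here is a CPU simulation of the `position + offset` part of the shader above. Run `i` reads only the `i`-th `position` and the `i`-th `offset`. This is a sketch for intuition only: the function name `applyOffsets` is hypothetical, and the real shader also multiplies by `projectionMatrix * modelViewMatrix`.

```javascript
// Simulate `position + offset` for every vertex shader run.
// Both attributes are flat arrays with 3 channels per vertex.
function applyOffsets(positions, offsets) {
  const itemSize = 3;
  const vertexCount = positions.length / itemSize;
  const result = new Float32Array(positions.length);
  // One loop iteration == one vertex shader run.
  for (let i = 0; i < vertexCount; i++) {
    for (let c = 0; c < itemSize; c++) {
      // Each run only sees the data point at its own index.
      result[i * itemSize + c] = positions[i * itemSize + c] + offsets[i * itemSize + c];
    }
  }
  return result;
}

const linePositions = new Float32Array([0, 0, 0, 1, 0, 0]);
const lineOffsets = new Float32Array([1, 3, 9, 1, 3, 9]);
const moved = applyOffsets(linePositions, lineOffsets);
// moved holds [1, 3, 9, 2, 3, 9]
```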

Vertex shader

A vertex shader is a program that runs on the GPU once for every vertex in a mesh. A vertex position is defined by 3 primary channels (components) plus a 4th channel.

```glsl
// i.e.
vec3 vertexPosition = vec3(0.2, 0.5, 0.1);
// or..
vec4(position, 1.0);
```

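The three primary channels are x/y/z. The 4th, w, is the homogeneous coordinate. It lets a 4x4 matrix apply translation and perspective. After the vertex shader runs, the GPU divides x, y and z by w; this step is the perspective divide. It is part of the conversion between coordinate systems that eventually ends in screen coordinates.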
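The perspective divide and the final mapping to screen coordinates can be sketched in plain JavaScript. This is a simplified model with three assumptions:

- The input is a clip-space position exactly as written to `gl_Position`.
- The viewport origin is in the bottom-left corner, as in WebGL.
- Depth handling is left out.

The function names are hypothetical.

```javascript
// clip: [x, y, z, w] as written to gl_Position.
function clipToNdc([x, y, z, w]) {
  // Perspective divide: done by the GPU after the vertex shader.
  return [x / w, y / w, z / w];
}

// Map NDC x/y in [-1, 1] to pixel coordinates in a width x height viewport
// (WebGL-style: origin at the bottom-left).
function ndcToScreen([nx, ny], width, height) {
  return [(nx + 1) * 0.5 * width, (ny + 1) * 0.5 * height];
}

// With a perspective projection, w grows with distance from the camera,
// so dividing by it pulls far points towards the center of the screen.
const nearNdc = clipToNdc([2, 1, 0, 2]);          // [1, 0.5, 0]
const farNdc = clipToNdc([2, 1, 0, 8]);           // [0.25, 0.125, 0]
const nearScreen = ndcToScreen(nearNdc, 800, 600); // [800, 450]
const farScreen = ndcToScreen(farNdc, 800, 600);   // [500, 337.5]
```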

This is why more vertices put more pressure on the GPU. Every extra vertex is another run of this program, and although the runs happen in parallel, the total work still grows.

So what is the correlation between vertex runs and fragment runs?

Fragment shaders

Fragment shaders determine the color of the mesh's material. So if you set:

```glsl
gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
```

you would get a completely red material in threejs. This code runs for every fragment of the mesh. To vary the color across the material, you have to modulate it with data that differs between fragments, like:

```glsl
gl_FragColor = vec4(vUv.x, 0.0, 0.0, 1.0);
```

With this in mind, we get a red gradient from left to right. vUv.x goes from 0 at the left edge to 1 at the right edge, so fragments further right are redder.
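To make the gradient concrete, the sketch below evaluates that fragment shader on the CPU for one row of pixels. It assumes three things:

- The quad exactly covers the pixel grid.
- `vUv.x` runs from 0 at the left edge to 1 at the right edge.
- Each fragment is shaded at its pixel center.

The function name `redChannelPerColumn` and the 4-pixel width are illustrative.

```javascript
// Evaluate gl_FragColor.r = vUv.x at each pixel center of one row.
function redChannelPerColumn(width) {
  const reds = [];
  for (let x = 0; x < width; x++) {
    // Fragments are shaded at pixel centers, hence the +0.5.
    const u = (x + 0.5) / width;
    reds.push(u);
  }
  return reds;
}

const gradientRow = redChannelPerColumn(4);
// gradientRow holds [0.125, 0.375, 0.625, 0.875]: darker on the left, redder on the right
```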

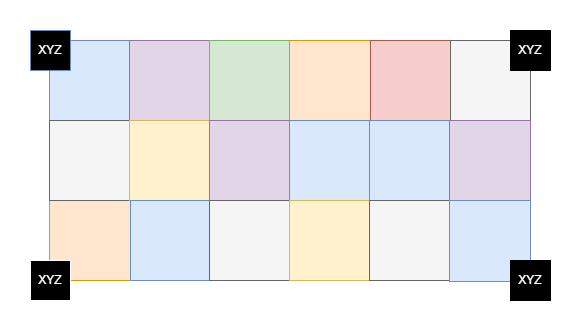

Vertex shader runs do not have a 1-to-1 relationship with fragment shader runs. Take a quad with 4 vertices that covers a 6x3 grid of pixels: it gives 4 vertex shader runs and 18 fragment shader runs.
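The 4-versus-18 count can be reproduced with a tiny software rasterizer. The sketch makes these assumptions:

- The quad exactly covers a 6x3 pixel grid and is split into two triangles along a diagonal.
- Each of the 4 vertices is shaded once, as in the text.
- A fragment is produced when a pixel center lies strictly inside a triangle, tested with edge functions.

Real GPUs add tie-breaking rules for centers lying exactly on an edge, but this grid never hits that case. The names `edge` and `countFragments` are hypothetical.

```javascript
// Edge function: > 0 when point p lies to the left of edge a -> b.
function edge(a, b, p) {
  return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]);
}

// Count pixel centers covered by a triangle with counter-clockwise vertices.
function countFragments(tri, width, height) {
  let count = 0;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const p = [x + 0.5, y + 0.5];
      if (edge(tri[0], tri[1], p) > 0 && edge(tri[1], tri[2], p) > 0 && edge(tri[2], tri[0], p) > 0) {
        count++;
      }
    }
  }
  return count;
}

// 4 shared vertices -> 4 vertex shader runs.
const v = [[0, 0], [6, 0], [6, 3], [0, 3]];
// Two counter-clockwise triangles sharing the diagonal v0 -> v2.
const triangles = [[v[0], v[1], v[2]], [v[0], v[2], v[3]]];

const fragmentRuns = triangles.reduce((sum, t) => sum + countFragments(t, 6, 3), 0);
console.log(`vertex runs: ${v.length}, fragment runs: ${fragmentRuns}`);
// vertex runs: 4, fragment runs: 18
```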

Remember: the code you write in a shader is generic, the same program for every run. To make its output specific to each fragment, you have two options. Either use data that differs per fragment, like UVs, or take advantage of the interpolation that happens between the two shader stages.

The diagram above shows the 4 vertices and the 18 pixels.

Think of data going from the vertex shader to the fragment shader this way: each fragment (pixel) lying between the vertices receives a mix of the vertices' data points. Each vertex's share depends on how close the fragment is to it.

```glsl
vec3 mixedColor = mix(vec3(1.0, 0.0, 0.0), vec3(0.0, 1.0, 0.0), 0.5);
// Halfway through, so half of the first param to mix
// and half of the second param to mix.

// i.e. mixedColor now holds:
// vec3(0.5, 0.5, 0.0)
```
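GLSL defines `mix(x, y, a)` as `x * (1 - a) + y * a`, applied per component. Here is a JavaScript equivalent for vectors stored as arrays. It is a sketch, and `mixVec` is a hypothetical name; it reproduces the result above.

```javascript
// Component-wise linear interpolation, same formula as GLSL mix().
function mixVec(x, y, a) {
  return x.map((xi, i) => xi * (1 - a) + y[i] * a);
}

const red = [1, 0, 0];
const green = [0, 1, 0];
const half = mixVec(red, green, 0.5);     // [0.5, 0.5, 0]
const quarter = mixVec(red, green, 0.25); // [0.75, 0.25, 0]
```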

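The above is linear interpolation between two values. Between the vertex and fragment shader, the GPU does something a little more involved: it blends the values from a triangle's three vertices (barycentric interpolation) and corrects for perspective. The principle is the same.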
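Across a triangle, a fragment's weights for the three vertices are its barycentric coordinates. By default, the GPU makes the result perspective-correct: it interpolates `value / w` and `1 / w` separately, then divides one by the other. The sketch below is a simplified CPU model of that formula and assumes the fragment's screen-space barycentric weights are already known. The function name is hypothetical, and hardware details such as precision or the `flat` qualifier available in WebGL 2 shaders are left out.

```javascript
// values: the varying at each of the 3 vertices (arrays of equal length)
// w:      the clip-space w of each vertex
// bary:   screen-space barycentric weights of the fragment (sum to 1)
function interpolateVarying(values, w, bary) {
  // Perspective-correct: weight each vertex by bary_i / w_i ...
  const weights = bary.map((b, i) => b / w[i]);
  const total = weights.reduce((s, x) => s + x, 0);
  // ... then renormalize so the effective weights sum to 1.
  return values[0].map((_, c) =>
    weights.reduce((s, wt, i) => s + wt * values[i][c], 0) / total
  );
}

const vertexColors = [[1, 0, 0], [0, 1, 0], [0, 0, 1]];

// All w equal (no perspective): a plain barycentric mix.
const sameDepth = interpolateVarying(vertexColors, [1, 1, 1], [0.5, 0.25, 0.25]);
// [0.5, 0.25, 0.25]

// Red vertex twice as far away (w = 2): its share drops from 0.5 to 1/3.
const redFarther = interpolateVarying(vertexColors, [2, 1, 1], [0.5, 0.25, 0.25]);
// roughly [0.333, 0.333, 0.333]
```

Because the weights always sum to 1, a varying that is identical at all three vertices (like the constant red in the earlier example) comes out unchanged for every fragment.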

So each fragment receives a mixed value of the varyings' data points as they pass from the vertex shader to the fragment shader.