I wanted to see whether it was possible to use depth data to recover world coordinates in a post-processing (shader pass) fragment shader.

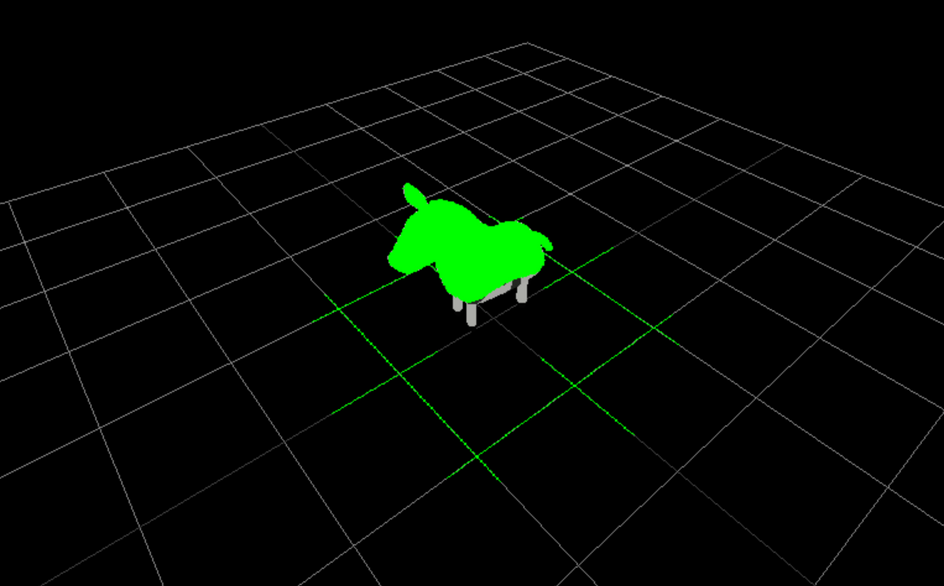

Here is the end result:

Here is the main file:

```jsx
import React, { useRef, useEffect } from 'react';
import { useThree, useFrame, extend } from '@react-three/fiber';
import { EffectComposer } from 'three/examples/jsm/postprocessing/EffectComposer';
import { ShaderPass } from 'three/examples/jsm/postprocessing/ShaderPass';
import { RenderPass } from 'three/examples/jsm/postprocessing/RenderPass';
import * as THREE from 'three';

extend({ EffectComposer, ShaderPass, RenderPass })

const shaderPass = {
  uniforms: {
    time: { value: 0 },
    tDiffuse: { value: null },
    depthTexture: { value: null },
    projectionMatrixInverse: { value: null },
    viewMatrixInverse: { value: null },
  },
  vertexShader: `
    varying vec2 vUv;
    void main () {
      vUv = uv;
      gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
    }
  `,
  fragmentShader: `
    uniform float time;
    uniform sampler2D tDiffuse;
    uniform sampler2D depthTexture;
    varying vec2 vUv;

    uniform mat4 projectionMatrixInverse;
    uniform mat4 viewMatrixInverse;

    vec3 worldCoordinatesFromDepth(float depth) {
      float z = depth * 2.0 - 1.0;

      vec4 clipSpaceCoordinate = vec4(vUv * 2.0 - 1.0, z, 1.0);
      vec4 viewSpaceCoordinate = projectionMatrixInverse * clipSpaceCoordinate;

      viewSpaceCoordinate /= viewSpaceCoordinate.w;

      vec4 worldSpaceCoordinates = viewMatrixInverse * viewSpaceCoordinate;

      return worldSpaceCoordinates.xyz;
    }

    float sphereSDF(vec3 p, float radius) {
      return length(p) - radius;
    }

    void main() {
      float depth = texture( depthTexture, vUv ).x;
      vec3 worldPosition = worldCoordinatesFromDepth(depth);
      float radius = mod(0.1 * time * 10.0, 3.0);

      if (sphereSDF(worldPosition, radius) < 0.0 && sphereSDF(worldPosition, radius) > -1.0) {
        gl_FragColor = vec4(0.0,1.0,0.0,1.0);
      } else {
        vec3 sceneColor = texture(tDiffuse, vUv).xyz;
        gl_FragColor = vec4(sceneColor, 1.0);
      }
    }
  `
}

const Effects = () => {
  const composer = useRef();
  const ref = useRef()
  const { gl, size, scene, camera } = useThree();

  const [ target ] = React.useMemo(() => {
    const target = new THREE.WebGLRenderTarget(
      window.innerWidth,
      window.innerHeight,
      {
        minFilter: THREE.LinearFilter,
        magFilter: THREE.LinearFilter,
        format: THREE.RGBFormat,
        stencilBuffer: false,
        depthBuffer: true,
        depthTexture: new THREE.DepthTexture()
      },
    );
    return [ target ];
  }, []);

  useEffect(() => {
    composer.current.setSize(size.width, size.height)
  }, [size])

  useFrame((state) => {
    state.gl.setRenderTarget(target);
    state.gl.render(scene, camera);

    if (ref.current) {
      ref.current.uniforms['depthTexture'].value = target.depthTexture;
      ref.current.uniforms['projectionMatrixInverse'].value = camera.projectionMatrixInverse;
      ref.current.uniforms['viewMatrixInverse'].value = camera.matrixWorld;
      ref.current.uniforms['time'].value = state.clock.elapsedTime;
    }
    composer.current.render()
  }, 1);

  return (
    <effectComposer ref={composer} args={[gl]}>
      <renderPass attachArray="passes" scene={scene} camera={camera} />
      <shaderPass attachArray="passes" ref={ref} args={[shaderPass]} needsSwap={false} renderToScreen />
    </effectComposer>
  )
}

export default Effects;
```

Firstly we need depth data! After some googling, it turns out you can obtain a depth texture from a render target.

We set up a render target like so:

```jsx
const [ target ] = React.useMemo(() => {
  const target = new THREE.WebGLRenderTarget(
    window.innerWidth,
    window.innerHeight,
    {
      minFilter: THREE.LinearFilter,
      magFilter: THREE.LinearFilter,
      format: THREE.RGBFormat,
      stencilBuffer: false,
      depthBuffer: true,
      depthTexture: new THREE.DepthTexture()
    },
  );
  return [ target ];
}, []);
```

There are a few options you must set on the render target. Most importantly, set depthBuffer to true and pass in a depthTexture, as seen above.

Inside useFrame you have access to the state, so you can render the scene into the render target on every frame:

```jsx
useFrame((state) => {
  state.gl.setRenderTarget(target);
  state.gl.render(scene, camera);

  //.....
})
```

Here we set the render target and render the scene to it. A render target is a buffer we can render into instead of the screen, and it is commonly used for off-screen rendering. In this case, though, we are only interested in grabbing the depth texture, which the render target exposes as a property.

Once we have done this, you can grab the depth texture simply by doing:

```jsx
const depthTexture = target.depthTexture;
```

This is just a texture. You can’t really access the data outside of a shader, or at least not easily in the context of R3F/three.js.

Once we have this you set it as a uniform:

```jsx
ref.current.uniforms['depthTexture'].value = target.depthTexture;
```

Then you can sample it in the fragment shader:

```glsl
uniform sampler2D depthTexture;
varying vec2 vUv;

void main() {
  float depth = texture(depthTexture, vUv).x;

  // Write the depth into all three color channels (grayscale).
  gl_FragColor = vec4(vec3(depth), 1.0);
}
```

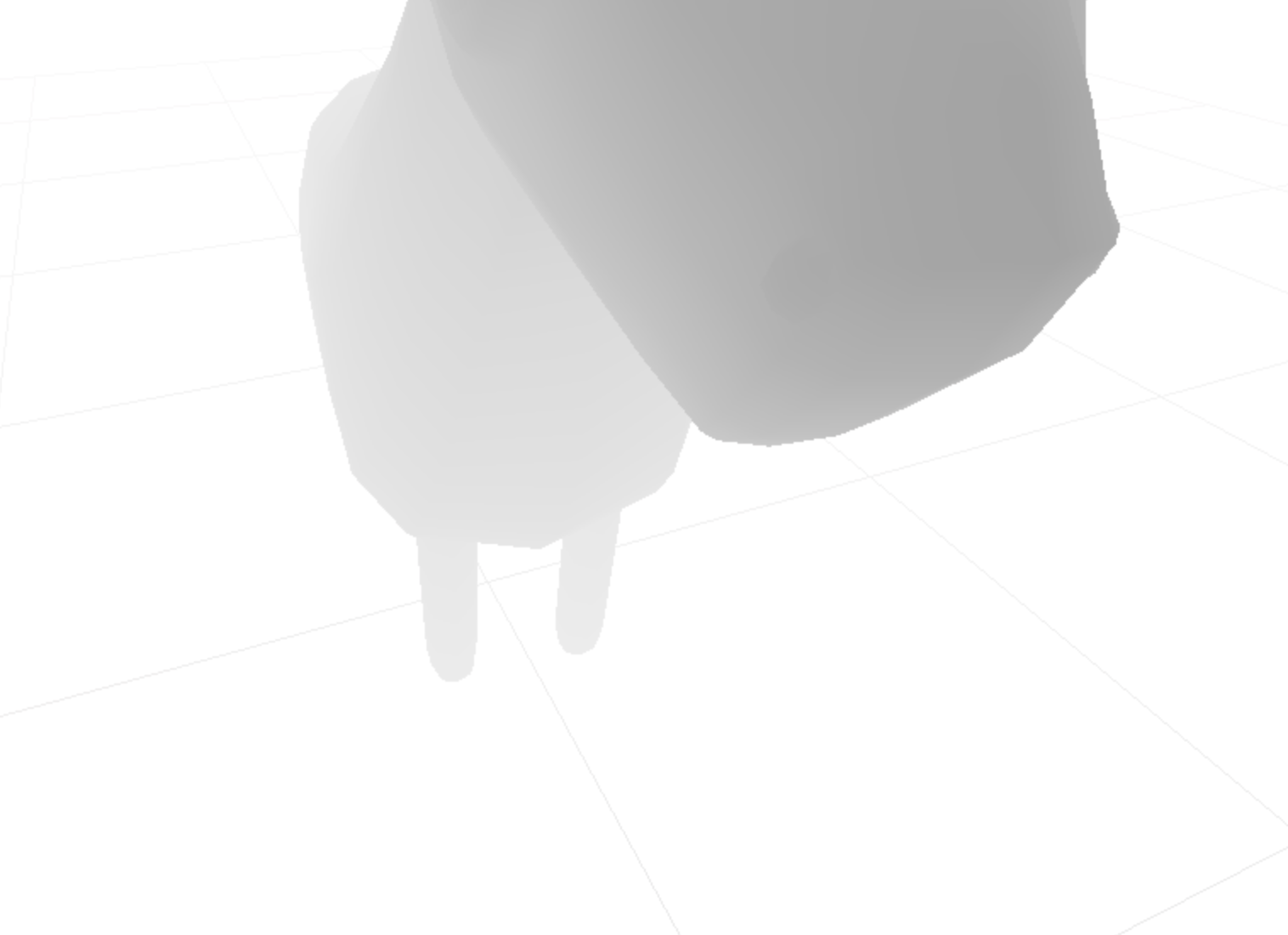

If you output the depth as the color like this, the greater the depth (z value), the whiter the pixel should be.

Here’s an example:

A small note: I’m fairly sure the depth value is stored in the first component of the vector that we get from reading the depth texture, i.e. .r or .x. I played around with this and tried it with .y and .g, and didn’t get any results. Feel free to correct me if this is the wrong interpretation! 🙂
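On the "whiter with depth" point: with a perspective camera the stored depth is not linear in distance, which is why a depth image tends to look almost entirely white. Here is a minimal sketch in plain JavaScript, assuming a standard OpenGL-style perspective projection. The function names `depthAtDistance` and `distanceFromDepth` and the near/far values are made up for illustration, not taken from the project.

```javascript
// Depth-buffer value in [0, 1] for a point `distance` units in front of a
// perspective camera, assuming an OpenGL-style projection matrix.
function depthAtDistance(distance, near, far) {
  const ndcZ = (far + near) / (far - near) - (2 * far * near) / ((far - near) * distance);
  return ndcZ * 0.5 + 0.5;
}

// Inverse of the above: recover the distance from a stored depth value.
function distanceFromDepth(depth, near, far) {
  const ndcZ = depth * 2 - 1;
  return (2 * far * near) / (far + near - ndcZ * (far - near));
}

// Assumed camera planes, purely for illustration.
const near = 0.1;
const far = 100;

console.log(depthAtDistance(1, near, far));  // about 0.90, already nearly white
console.log(depthAtDistance(10, near, far)); // about 0.99
console.log(distanceFromDepth(depthAtDistance(10, near, far), near, far)); // about 10
```

If you want a grayscale image with more visible contrast, converting the depth back to a distance like this and dividing by the far plane is one option.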

World coordinates in the fragment shader

We now have a depth (z) value, so how can we use it in a GLSL shader?

Before we take a deep dive, here’s the website for understanding coordinate systems.

Well here’s the general idea:

- Get clip space coordinates

- Get view space coordinates

- Finally, calculate world space coordinates

Here’s the function we use to get world coordinates:

```glsl
vec3 worldCoordinatesFromDepth(float depth) {
  float z = depth * 2.0 - 1.0;

  vec4 clipSpaceCoordinate = vec4(vUv * 2.0 - 1.0, z, 1.0);
  vec4 viewSpaceCoordinate = projectionMatrixInverse * clipSpaceCoordinate;

  viewSpaceCoordinate /= viewSpaceCoordinate.w;

  vec4 worldSpaceCoordinates = viewMatrixInverse * viewSpaceCoordinate;

  return worldSpaceCoordinates.xyz;
}
```

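vUv and the depth value are both in the range [0, 1]: vUv is the pixel's screen coordinate and the depth comes from the depth texture. We convert them to the [-1.0, 1.0] range used by clip space (strictly speaking these are normalized device coordinates, since the perspective divide has already happened) by doing * 2.0 - 1.0. I’ll let you do some examples in your head but it does work 😀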
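If you would rather not do the examples in your head, here is the same mapping as a tiny JavaScript sketch. The helper name `toSignedRange` is made up for illustration; in the shader this is just `* 2.0 - 1.0`.

```javascript
// Map a value from the [0, 1] range to the [-1, 1] range,
// the same operation as `x * 2.0 - 1.0` in the shader.
function toSignedRange(x) {
  return x * 2 - 1;
}

console.log(toSignedRange(0));   // -1 (left/bottom edge of the screen, or the near plane)
console.log(toSignedRange(0.5)); //  0 (centre of the screen)
console.log(toSignedRange(1));   //  1 (right/top edge of the screen, or the far plane)
```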

To then go from clip space to view space, we multiply by the inverse projection matrix.

After multiplying by the inverse projection matrix, the result is still a homogeneous coordinate whose w component is not 1. To undo the perspective divide that happens automatically after the vertex shader runs, we divide by w (or, equivalently, multiply by its reciprocal):

```glsl
viewSpaceCoordinate = viewSpaceCoordinate * (1.0 / viewSpaceCoordinate.w);

// or, equivalently:

viewSpaceCoordinate /= viewSpaceCoordinate.w;
```

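Finally we multiply the viewSpaceCoordinate by the inverse viewMatrix, which in three.js is simply camera.matrixWorld (this is what the main file passes in as the viewMatrixInverse uniform).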

And there we go! World space coordinates!!
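To convince yourself that the three steps really do invert the projection, you can run the same maths on the CPU. The sketch below is plain JavaScript with no three.js, and it makes some simplifying assumptions: the camera is only translated (no rotation), the projection is a standard OpenGL-style perspective matrix with a hand-written inverse, and all names (`makeCamera`, `projectToScreen`, `worldFromDepth`) are hypothetical. It projects a world point to a UV and depth, then recovers it with the same steps as `worldCoordinatesFromDepth`.

```javascript
// Multiply a row-major 4x4 matrix by a 4-component column vector.
function mulMat4Vec4(m, v) {
  return m.map((row) => row[0] * v[0] + row[1] * v[1] + row[2] * v[2] + row[3] * v[3]);
}

// Build the matrices for a translated (unrotated) perspective camera.
// The inverses are written in closed form rather than computed numerically.
function makeCamera(fovDeg, aspect, near, far, position) {
  const f = 1 / Math.tan((fovDeg * Math.PI) / 360);
  const a = f / aspect;
  const c = (far + near) / (near - far);
  const d = (2 * far * near) / (near - far);
  const [px, py, pz] = position;
  return {
    projection: [[a, 0, 0, 0], [0, f, 0, 0], [0, 0, c, d], [0, 0, -1, 0]],
    projectionInverse: [[1 / a, 0, 0, 0], [0, 1 / f, 0, 0], [0, 0, 0, -1], [0, 0, 1 / d, c / d]],
    view: [[1, 0, 0, -px], [0, 1, 0, -py], [0, 0, 1, -pz], [0, 0, 0, 1]],
    viewInverse: [[1, 0, 0, px], [0, 1, 0, py], [0, 0, 1, pz], [0, 0, 0, 1]],
  };
}

// Forward direction: world position -> screen UV and depth, both in [0, 1].
function projectToScreen(cam, world) {
  const clip = mulMat4Vec4(cam.projection, mulMat4Vec4(cam.view, [...world, 1]));
  const ndc = clip.slice(0, 3).map((value) => value / clip[3]); // perspective divide
  return { uv: [ndc[0] * 0.5 + 0.5, ndc[1] * 0.5 + 0.5], depth: ndc[2] * 0.5 + 0.5 };
}

// Reverse direction: mirrors worldCoordinatesFromDepth from the shader.
function worldFromDepth(cam, uv, depth) {
  const clipSpace = [uv[0] * 2 - 1, uv[1] * 2 - 1, depth * 2 - 1, 1];
  const viewSpace = mulMat4Vec4(cam.projectionInverse, clipSpace);
  const divided = viewSpace.map((value) => value / viewSpace[3]);
  return mulMat4Vec4(cam.viewInverse, divided).slice(0, 3);
}

const cam = makeCamera(50, 16 / 9, 0.1, 100, [0, 1, 5]);
const original = [1, 2, -3];
const { uv, depth } = projectToScreen(cam, original);
const recovered = worldFromDepth(cam, uv, depth);

console.log(recovered); // approximately [1, 2, -3], up to floating-point error
```

In the real effect, three.js supplies these matrices for you as `camera.projectionMatrixInverse` and `camera.matrixWorld`, so none of this setup is needed there.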

A very useful GitHub issue which explains matrices in terms of three.js and GLSL is here. This will give you a clear idea of what's what and also which matrices you need the inverse of…

Expanding color across scene

All we finally do is use this world coordinate for every pixel of the screen and grow an SDF by increasing its radius with time:

```glsl
float radius = mod(0.1 * time * 10.0, 3.0);

if (sphereSDF(worldPosition, radius) < 0.0 && sphereSDF(worldPosition, radius) > -1.0) {
  gl_FragColor = vec4(0.0,1.0,0.0,1.0);
} else {
  vec3 sceneColor = texture(tDiffuse, vUv).xyz;
  gl_FragColor = vec4(sceneColor, 1.0);
}
```

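The trick here is to get a repeating expansion of color every 3 units, so we use the built-in mod function (modulus): the radius grows from 0 to 3 and then wraps back to 0. The two SDF conditions together select a shell one unit thick just inside the sphere's surface.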
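To see how the wave behaves over time, here is the same condition as a plain JavaScript sketch. `glslMod` is a hand-written stand-in for GLSL's `mod`, and `isInWave` is a hypothetical helper that is not part of the project. Note that `mod(0.1 * time * 10.0, 3.0)` simplifies to `mod(time, 3.0)`, which is what the sketch uses.

```javascript
// GLSL-style mod: x - y * floor(x / y).
function glslMod(x, y) {
  return x - y * Math.floor(x / y);
}

// Signed distance from point p to a sphere of the given radius at the origin.
function sphereSDF(p, radius) {
  return Math.hypot(p[0], p[1], p[2]) - radius;
}

// True when the world position lies in the coloured band:
// inside the sphere, but no more than 1 unit from its surface.
function isInWave(worldPosition, time) {
  const radius = glslMod(time, 3.0);
  const distance = sphereSDF(worldPosition, radius);
  return distance < 0.0 && distance > -1.0;
}

const point = [2, 0, 0]; // a point 2 units from the origin

console.log(isInWave(point, 1.0)); // false: the wave (radius 1) has not reached it yet
console.log(isInWave(point, 2.5)); // true: radius 2.5, the point is 0.5 inside the surface
console.log(isInWave(point, 5.5)); // true: the radius has wrapped around to 2.5 again
```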

Final Thoughts

This was an experiment to play around with depth textures and to better understand coordinate systems.

We have covered render targets, depth textures and converting between coordinate systems in GLSL.

One thing you could do: if you have orbs with bloom and want a wave of color to hit only those orbs and not the rest of the scene, you could render the orbs both in the main scene and in the scene drawn to the render target. Then, using the depth data from the render target with a couple of conditionals, you can target specific things in a scene and do effects in 3D space.

This only scratches the surface of what you can do with this, but it's a nice intro to depth data and how to use it 😎

Until next time!