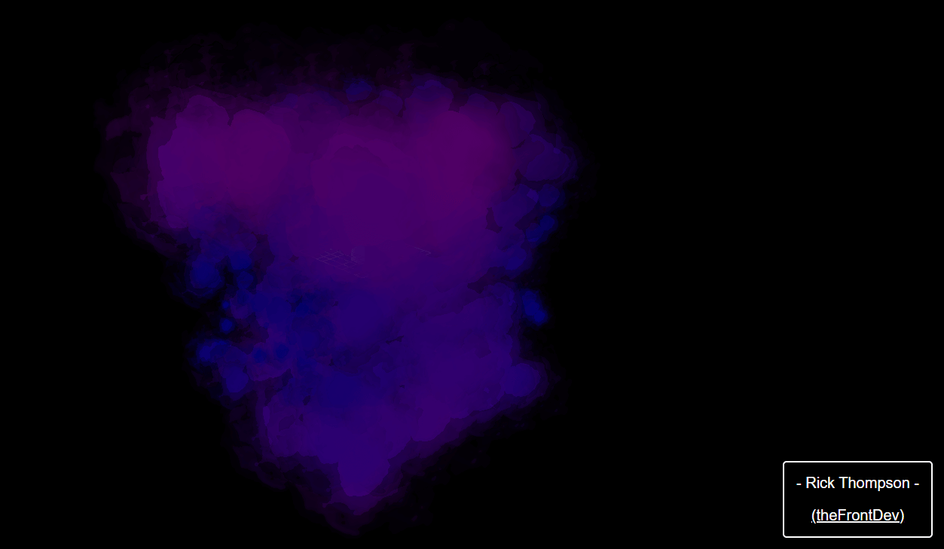

Below is the code and example from codesandbox:

The premise is to spread planes across an area and make them look at the camera (done automatically with points in Three). Then, using a shaderMaterial, we modify the scale (gl_PointSize) and colors of the planes using pre-calculated pseudo normals.

Remember, when we use normals in light calculations, the colour at each point depends on how the light hits the surface along that normal. This means we get different colours depending on the normals that we pre-computed and passed to this shader.

Below is the main component for the shader.

    const tex = useLoader(THREE.TextureLoader, "/test-3.3.png");
    tex.encoding = THREE.LinearEncoding;
    const orb = useLoader(THREE.TextureLoader, "/Smoke15Frames.png");
    const pointsRef = useRef();
    const shaderRef = useRef();

    const shader = {
      uniforms: {
        positions: {
          value: tex,
        },
        orb: {
          value: orb,
        },
        time: {
          value: 0,
        },
      },
      // shaders.....
    };

    let [positions, normals] = useMemo(() => {
      const positions = [];
      const normals = [];

      for (let i = 0; i < 400; i++) {
        const x = Math.random() * 30 - 15;
        const y = Math.random() * 30 - 15;
        const z = Math.random() * 30 - 15;
        positions.push(x, y, z);

        const normal = new THREE.Vector3(x, y, z).normalize();
        normals.push(normal.x, normal.y, normal.z);
      }

      return [new Float32Array(positions), new Float32Array(normals)];
    }, []);

    useFrame(({ clock }) => {
      if (shaderRef.current) {
        shaderRef.current.uniforms.time.value = clock.elapsedTime * 0.35;
      }
    });

    return (
      <group>
        <OrbitControls />

        <points ref={pointsRef}>
          <bufferGeometry attach="geometry">
            <bufferAttribute
              attach="attributes-position"
              array={positions}
              count={positions.length / 3}
              itemSize={3}
            />
            <bufferAttribute
              attach="attributes-calNormal"
              array={normals}
              count={normals.length / 3.0}
              itemSize={3}
            />
          </bufferGeometry>
          <shaderMaterial
            attach="material"
            ref={shaderRef}
            vertexShader={shader.vertexShader}
            fragmentShader={shader.fragmentShader}
            uniforms={shader.uniforms}
            transparent={true}
            alphaToCoverage
            sizeAttenuation={false}
            depthTest={true}
            depthWrite={true}
          />
        </points>
      </group>
    );

So this is all pretty standard stuff.

We load some textures and apply them to the planes to give a feel of transparency and fluffiness. The textures are passed to the shader through uniforms; once in the shader, we sample them and output the alpha and the mixed color.

Some key settings are as follows:

    transparent={true}
    alphaToCoverage
    sizeAttenuation={false}
    depthTest={true}
    depthWrite={true}

alphaToCoverage is a technique described as:

> Enables alpha to coverage. Can only be used with MSAA-enabled contexts (meaning when the renderer was created with antialias parameter set to true). Default is false.

This somewhat mitigates the transparency issue where bright colours shine through darker ones.

We define a points object with custom attributes and then use a custom shaderMaterial, bearing in mind that any attributes defined like this have to use a typed array, as we have done in the useMemo function above:

    <points ref={pointsRef}>
      <bufferGeometry attach="geometry">
        <bufferAttribute
          attach="attributes-position"
          array={positions}
          count={positions.length / 3}
          itemSize={3}
        />
        <bufferAttribute
          attach="attributes-calNormal"
          array={normals}
          count={normals.length / 3.0}
          itemSize={3}
        />
      </bufferGeometry>
      <shaderMaterial
        attach="material"
        ref={shaderRef}
        vertexShader={shader.vertexShader}
        fragmentShader={shader.fragmentShader}
        uniforms={shader.uniforms}
        transparent={true}
        alphaToCoverage
        sizeAttenuation={false}
        depthTest={true}
        depthWrite={true}
      />
    </points>

We can then declare the matching attribute in the shader like:

    attribute vec3 calNormal;
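To make the data behind `calNormal` concrete, here is a minimal, dependency-free sketch of the same loop the useMemo function runs, without `THREE.Vector3`. The names `makePointData` and `random` are made up for this illustration and are not part of the original component; the pseudo normal is simply the position vector scaled to length 1.

```javascript
// Dependency-free sketch of the useMemo loop: random positions in a
// 30 x 30 x 30 cube, plus one pseudo normal per point (the normalized
// position vector). `makePointData` and `random` are hypothetical names.
function makePointData(count, random = Math.random) {
  const positions = new Float32Array(count * 3);
  const normals = new Float32Array(count * 3);

  for (let i = 0; i < count; i++) {
    const x = random() * 30 - 15;
    const y = random() * 30 - 15;
    const z = random() * 30 - 15;
    positions.set([x, y, z], i * 3);

    // Guard against a zero-length vector before dividing.
    const len = Math.hypot(x, y, z) || 1;
    normals.set([x / len, y / len, z / len], i * 3);
  }

  return { positions, normals };
}

const { positions, normals } = makePointData(400);
console.log(positions.length / 3, normals.length / 3); // 400 400
```

Each group of three floats in `normals` lines up with the same group in `positions`, which is why both bufferAttributes use `itemSize={3}` and the same count.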

Vertex Shader

The vertex shader is where we oscillate and scale the planes up and down over time, to give the pseudo effect of a moving volume (very fake, basic dynamics). This is a neat way of mimicking volumes from afar. It doesn't necessarily look too good the closer you get in 3D space.

    uniform sampler2D positions;
    uniform float time;
    varying vec2 vUv;
    varying vec3 vNormal;
    varying float vDepth;
    varying vec3 vPosition;
    varying float vNoise;
    attribute float index;
    attribute vec3 calNormal;

    ${noise}

    void main () {

      float noise = Perlin3D(position * 0.041 + time);

      gl_PointSize = mix(160.0, 100.0 * 5.0, noise);

      vec4 worldPosition = modelMatrix * vec4(position, 1.0);
      vec4 cameraSpacePosition = viewMatrix * worldPosition;
      float distance = length(cameraSpacePosition.xyz);
      vDepth = distance;

      float adjustedPointSize = gl_PointSize * 120.0 / distance; // Adjust this factor as needed
      gl_PointSize = adjustedPointSize;

      gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0) ;
      vNormal = calNormal;
      vDepth = gl_Position.z / gl_Position.w;
      vPosition = position;
      vNoise = noise;
    }

The point size (gl_PointSize) is deliberately quite big, and it changes over time with a continuous noise function:

    float noise = Perlin3D(position * 0.041 + time);

    gl_PointSize = mix(160.0, 100.0 * 5.0, noise);

(Adding time shifts where the noise is sampled, so each point gets a new noise value every frame.)

We then scale gl_PointSize by 120.0 / distance, so the planes get bigger the closer they are to the camera.
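As a rough check of the numbers, the size arithmetic from the vertex shader can be mirrored in plain JavaScript. This is only a sketch: `pointSize` is a made-up helper name, and it assumes the noise value lands roughly in the 0 to 1 range, which depends on the Perlin3D implementation that is not shown here.

```javascript
// GLSL-style mix(): linear interpolation between a and b.
const mix = (a, b, t) => a * (1 - t) + b * t;

// Mirrors the vertex shader: noise picks a base size between 160 and 500,
// then the size is scaled by 120 / distance-to-camera.
// `pointSize` is a hypothetical helper for illustration only.
function pointSize(noise, distance) {
  const base = mix(160.0, 100.0 * 5.0, noise);
  return (base * 120.0) / distance;
}

console.log(pointSize(0.0, 120.0)); // 160
console.log(pointSize(1.0, 120.0)); // 500
console.log(pointSize(0.5, 240.0)); // 165
```

At a camera distance of 120 units the scale factor is exactly 1, so 120.0 is effectively the distance at which a point keeps its base size.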

Then we pass a fair few varyings on to be used in the fragment shader.

Fragment Shader

The fragment shader is where we utilize the normals and create colors for the planes:

    uniform sampler2D orb;
    uniform float time;
    varying vec2 vUv;
    varying vec3 vNormal;
    varying float vDepth;
    varying vec3 vPosition;
    varying float vNoise;


    ${noise}

    void main () {

      float x = 256.0 / 1280.0;
      float y = 256.0 / 768.0;

      float total = 1280.0 / 256.0;

      float col = mod(1.0, total);
      float row = floor(1.0 / total);

      // Finally to UV texture coordinates
      vec2 uv = vec2(
        x * gl_PointCoord.x + col * x,
        1.0 - y - row * y + y * gl_PointCoord.y
      );

      float alpha = texture2D(orb, uv).a;

      if (alpha < 0.01) discard;

      vec3 lightPosition = vec3(0.0,10.0,0.0);

      vec3 lightDir = normalize(lightPosition - vPosition.xyz);

      float distance = length(lightPosition - vPosition.xyz) * 2.030;
      float attenuation = 1.0 / (1.0 + 0.1 * distance + 0.01 * distance * distance);

      float intensity = dot(vNormal, lightDir);

      vec3 finalColor = vec3(intensity) * attenuation;

      // gl_FragColor = vec4( color.rgb, alpha);
      gl_FragColor = vec4(mix( finalColor + vec3(.20,0.,0.47),finalColor + vec3(.710,0.,0.47) , vNoise),alpha);
    }

To sample the orb texture, we use some made-up UVs:

    float x = 256.0 / 1280.0;
    float y = 256.0 / 768.0;

    float total = 1280.0 / 256.0;

    float col = mod(1.0, total);
    float row = floor(1.0 / total);

    // Finally to UV texture coordinates
    vec2 uv = vec2(
      x * gl_PointCoord.x + col * x,
      1.0 - y - row * y + y * gl_PointCoord.y
    );

    float alpha = texture2D(orb, uv).a;

This is basically me playing around with it till I get something I like visually.
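For anyone who wants to see what those numbers select, here is a small JavaScript sketch of the same arithmetic. It reads the constants as a 1280 x 768 sheet of 256 px tiles (5 columns by 3 rows), which is my interpretation of the values rather than something the shader states. `tileUv` and its `frame` parameter are hypothetical names; in the shader the frame index is hard-coded to 1.0.

```javascript
// Sheet and tile sizes taken from the shader constants.
const SHEET_W = 1280;
const SHEET_H = 768;
const TILE = 256;

// Maps a point coordinate (0..1 across the point sprite) to UVs inside
// one tile of the sheet. `tileUv` is a hypothetical helper.
function tileUv(pointCoord, frame = 1) {
  const x = TILE / SHEET_W; // tile width in UV space (0.2)
  const y = TILE / SHEET_H; // tile height in UV space (about 0.333)
  const total = SHEET_W / TILE; // tiles per row (5)

  const col = frame % total;
  const row = Math.floor(frame / total);

  return [
    x * pointCoord[0] + col * x,
    1 - y - row * y + y * pointCoord[1],
  ];
}

console.log(tileUv([0, 0])); // roughly [0.2, 0.667]
console.log(tileUv([1, 1])); // roughly [0.4, 1]
```

With the frame fixed at 1, every point samples the second tile of the top row. Passing a different `frame` would walk across the sheet, which is one way the hard-coded value could be played with.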

The light calculation models how a light source, defined by its position, interacts with this material:

    vec3 lightPosition = vec3(0.0,10.0,0.0);

    vec3 lightDir = normalize(lightPosition - vPosition.xyz);

    float distance = length(lightPosition - vPosition.xyz) * 2.030;
    float attenuation = 1.0 / (1.0 + 0.1 * distance + 0.01 * distance * distance);

    float intensity = dot(vNormal, lightDir);

    vec3 finalColor = vec3(intensity) * attenuation;

Again, this is me doing some basic light calculations with pseudo values, tuned to whatever looks good to me.
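The same lighting maths can be sketched in JavaScript to get a feel for the values it produces. The vector helpers and the `shade` function below are illustrative names, not part of the shader, and the constants are copied from the GLSL as-is.

```javascript
// Tiny vector helpers (hypothetical names, arrays of 3 numbers).
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const vecLength = (v) => Math.hypot(v[0], v[1], v[2]);
const normalize = (v) => {
  const len = vecLength(v) || 1;
  return v.map((c) => c / len);
};

const LIGHT_POSITION = [0.0, 10.0, 0.0];

// Returns the grey value the shader stores in finalColor.
function shade(position, normal) {
  const toLight = sub(LIGHT_POSITION, position);
  const lightDir = normalize(toLight);

  const distance = vecLength(toLight) * 2.03;
  const attenuation = 1.0 / (1.0 + 0.1 * distance + 0.01 * distance * distance);

  // Not clamped, matching the shader: normals facing away go negative.
  const intensity = dot(normal, lightDir);
  return intensity * attenuation;
}

console.log(shade([0, 0, 0], [0, 1, 0]) > 0); // true
console.log(shade([0, 0, 0], [0, -1, 0]) < 0); // true
```

Because the dot product is not clamped, points whose pseudo normal faces away from the light end up with a negative value, which darkens the tint added in the final mix.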

And then the final output color is:

    gl_FragColor = vec4(mix( finalColor + vec3(.20,0.,0.47),finalColor + vec3(.710,0.,0.47) , vNoise),alpha);

This is straightforward: we add an arbitrary colour to the final colour in both cases and mix between the two results using the noise value for randomness, so we get pulsating planes with differing colours. The alpha is the value we sampled earlier.
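A quick JavaScript sketch of that last line shows what the noise actually changes. `finalFragColor` is a made-up name for this illustration, and `lit` stands in for the single grey value held in finalColor.

```javascript
// GLSL-style mix(): linear interpolation between a and b.
const mix = (a, b, t) => a * (1 - t) + b * t;

// Mirrors the gl_FragColor line: two tints are added to the lit value
// and blended by the noise. `finalFragColor` is a hypothetical helper.
function finalFragColor(lit, noise, alpha) {
  const tintA = [0.2, 0.0, 0.47];
  const tintB = [0.71, 0.0, 0.47];
  const rgb = tintA.map((a, i) => mix(lit + a, lit + tintB[i], noise));
  return [...rgb, alpha];
}

console.log(finalFragColor(0, 0, 1)); // [ 0.2, 0, 0.47, 1 ]
console.log(finalFragColor(0, 1, 1)); // [ 0.71, 0, 0.47, 1 ]
```

The two tints differ only in the red channel, so the noise effectively slides each plane between a bluer purple and a pinker one.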