Recently I wanted to implement a particle system in react-three-fiber (R3F). After some extensive reading, I turned to GPU particles. The one thing I really wanted to understand was how to do this in an R3F setting! There are some amazing articles on the technique, but none of them use R3F…

Let’s start from the beginning.

If we try to generate a large number of particles in a normal R3F project without using shaders, it very quickly becomes apparent that the FPS drops. This is because JavaScript runs on a single thread: everything happens in sequence, and the render loop has to finish every particle update before it can draw the next frame.

A perfect use case for GPUs is highly parallelised computation. So instead of looping through 262,144 particle objects on the CPU in JavaScript and updating positions, rotations and colours every frame, many times a second, we move all these calculations to a shader, which runs on the GPU.
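To make the cost concrete, here is a rough sketch of the kind of CPU-side loop we are trying to avoid. The function name `updateOnCpu` and the sine-based motion are illustrative assumptions, not part of the final code.

```javascript
// Hypothetical CPU baseline: one pass over every particle, every frame.
const PARTICLE_COUNT = 512 * 512; // 262,144

function updateOnCpu(positions, time) {
  // positions is a flat [x, y, z, x, y, z, ...] array
  for (let i = 0; i < positions.length; i += 3) {
    positions[i] += Math.sin(time + i) * 0.01;
    positions[i + 1] += Math.cos(time + i) * 0.01;
    positions[i + 2] += Math.sin(time * 0.5 + i) * 0.01;
  }
  return positions;
}

const cpuPositions = new Float32Array(PARTICLE_COUNT * 3);
updateOnCpu(cpuPositions, 0);
```

Every one of those 786,432 writes happens one after another on the main thread, which is exactly the work a shader spreads across the GPU.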

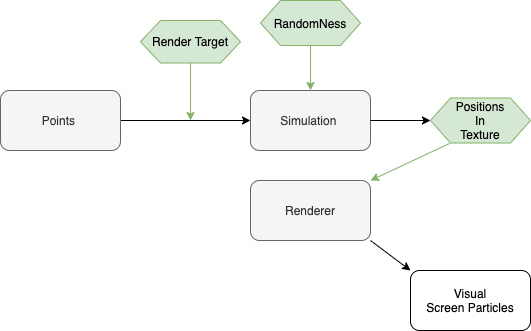

Here is a diagram I created to explain the general approach:

The reason I quoted 262,144 particles is that this is 512 × 512, the size of the texture we will use to generate the particles. Here's the general gist of what's going to happen:

1. Create a simple sphere.
2. Sample the surface of this sphere.
3. Generate a DataTexture with the initial positions.
4. Create a simulation shader.
5. Create a render shader, which will render what we see.
6. Pass a render target between both shaders to update positions.
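Since each particle lives in one texel of that 512 × 512 texture, it can help to see how a particle index maps to a texel and to a UV coordinate. This is a minimal sketch: `particleToTexel` and `texelToUv` are hypothetical helper names, and the half-texel offset is my assumption about sampling at texel centres.

```javascript
const TEX_WIDTH = 512;
const TEX_HEIGHT = 512;

// Row-major layout: particle i sits at column i % width, row floor(i / width).
function particleToTexel(index, width = TEX_WIDTH) {
  return { x: index % width, y: Math.floor(index / width) };
}

// UV of the texel centre, in the 0..1 range a shader would sample with.
function texelToUv(texel, width = TEX_WIDTH, height = TEX_HEIGHT) {
  return { u: (texel.x + 0.5) / width, v: (texel.y + 0.5) / height };
}

const lastParticle = particleToTexel(TEX_WIDTH * TEX_HEIGHT - 1);
const lastUv = texelToUv(lastParticle);
```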

The final code:

```jsx
import React, { useRef, useMemo, useEffect } from "react";
import * as THREE from "three";
import { extend, useFrame, useThree } from "@react-three/fiber";
import { OrbitControls } from "three/examples/jsm/controls/OrbitControls";
import { MeshSurfaceSampler } from "three/examples/jsm/math/MeshSurfaceSampler";

extend({ OrbitControls, MeshSurfaceSampler });

function sampleMesh(width, height, size, sphere) {
  var len = width * height * 4;
  var data = new Float32Array(len);
  const sampler = new MeshSurfaceSampler(sphere).build();
  for (let i = 0; i < len; i += 4) {
    const tempPosition = new THREE.Vector3();
    sampler.sample(tempPosition);
    data[i] = tempPosition.x;
    data[i + 1] = tempPosition.y;
    data[i + 2] = tempPosition.z;
    data[i + 3] = Math.random() * 10.0;
  }
  return data;
}

const simulationVertexShader = `
varying vec2 vUv;
void main() {
  vUv = uv;
  gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
}
`;
const simulationFragmentShader = `
uniform sampler2D positions;//DATA Texture containing original positions
varying vec2 vUv;
uniform float uTime;

vec4 permute(vec4 x){return mod(((x*34.0)+1.0)*x, 289.0);}
vec4 taylorInvSqrt(vec4 r){return 1.79284291400159 - 0.85373472095314 * r;}
vec3 fade(vec3 t) {return t*t*t*(t*(t*6.0-15.0)+10.0);}

float cnoise(vec3 P){
  vec3 Pi0 = floor(P); // Integer part for indexing
  vec3 Pi1 = Pi0 + vec3(1.0); // Integer part + 1
  Pi0 = mod(Pi0, 289.0);
  Pi1 = mod(Pi1, 289.0);
  vec3 Pf0 = fract(P); // Fractional part for interpolation
  vec3 Pf1 = Pf0 - vec3(1.0); // Fractional part - 1.0
  vec4 ix = vec4(Pi0.x, Pi1.x, Pi0.x, Pi1.x);
  vec4 iy = vec4(Pi0.yy, Pi1.yy);
  vec4 iz0 = Pi0.zzzz;
  vec4 iz1 = Pi1.zzzz;

  vec4 ixy = permute(permute(ix) + iy);
  vec4 ixy0 = permute(ixy + iz0);
  vec4 ixy1 = permute(ixy + iz1);

  vec4 gx0 = ixy0 / 7.0;
  vec4 gy0 = fract(floor(gx0) / 7.0) - 0.5;
  gx0 = fract(gx0);
  vec4 gz0 = vec4(0.5) - abs(gx0) - abs(gy0);
  vec4 sz0 = step(gz0, vec4(0.0));
  gx0 -= sz0 * (step(0.0, gx0) - 0.5);
  gy0 -= sz0 * (step(0.0, gy0) - 0.5);

  vec4 gx1 = ixy1 / 7.0;
  vec4 gy1 = fract(floor(gx1) / 7.0) - 0.5;
  gx1 = fract(gx1);
  vec4 gz1 = vec4(0.5) - abs(gx1) - abs(gy1);
  vec4 sz1 = step(gz1, vec4(0.0));
  gx1 -= sz1 * (step(0.0, gx1) - 0.5);
  gy1 -= sz1 * (step(0.0, gy1) - 0.5);

  vec3 g000 = vec3(gx0.x,gy0.x,gz0.x);
  vec3 g100 = vec3(gx0.y,gy0.y,gz0.y);
  vec3 g010 = vec3(gx0.z,gy0.z,gz0.z);
  vec3 g110 = vec3(gx0.w,gy0.w,gz0.w);
  vec3 g001 = vec3(gx1.x,gy1.x,gz1.x);
  vec3 g101 = vec3(gx1.y,gy1.y,gz1.y);
  vec3 g011 = vec3(gx1.z,gy1.z,gz1.z);
  vec3 g111 = vec3(gx1.w,gy1.w,gz1.w);

  vec4 norm0 = taylorInvSqrt(vec4(dot(g000, g000), dot(g010, g010), dot(g100, g100), dot(g110, g110)));
  g000 *= norm0.x;
  g010 *= norm0.y;
  g100 *= norm0.z;
  g110 *= norm0.w;
  vec4 norm1 = taylorInvSqrt(vec4(dot(g001, g001), dot(g011, g011), dot(g101, g101), dot(g111, g111)));
  g001 *= norm1.x;
  g011 *= norm1.y;
  g101 *= norm1.z;
  g111 *= norm1.w;

  float n000 = dot(g000, Pf0);
  float n100 = dot(g100, vec3(Pf1.x, Pf0.yz));
  float n010 = dot(g010, vec3(Pf0.x, Pf1.y, Pf0.z));
  float n110 = dot(g110, vec3(Pf1.xy, Pf0.z));
  float n001 = dot(g001, vec3(Pf0.xy, Pf1.z));
  float n101 = dot(g101, vec3(Pf1.x, Pf0.y, Pf1.z));
  float n011 = dot(g011, vec3(Pf0.x, Pf1.yz));
  float n111 = dot(g111, Pf1);

  vec3 fade_xyz = fade(Pf0);
  vec4 n_z = mix(vec4(n000, n100, n010, n110), vec4(n001, n101, n011, n111), fade_xyz.z);
  vec2 n_yz = mix(n_z.xy, n_z.zw, fade_xyz.y);
  float n_xyz = mix(n_yz.x, n_yz.y, fade_xyz.x);
  return 2.2 * n_xyz;
}

const vec4 magic = vec4(1111.1111, 3141.5926, 2718.2818, 0);

void main() {
  vec3 pos = texture2D( positions, vUv ).rgb;

  // Some initial random numbers being generated
  vec2 tc = vUv * magic.xy;
  vec3 skewed_seed = vec3(0.7856 * magic.z + tc.y - tc.x) + magic.yzw;

  // Generating noise using these random initial numbers,
  // and generating a new noise every frame using uTime.
  vec3 velocity;
  velocity.x = cnoise(vec3(tc.x, tc.y, skewed_seed.x) + uTime);
  velocity.y = cnoise(vec3(tc.y, skewed_seed.y, tc.x) + uTime);
  velocity.z = cnoise(vec3(skewed_seed.z, tc.x, tc.y) + uTime);

  // Divide the velocity by 10.0 to slow it down a lot.
  velocity = normalize(velocity) / 10.0;

  gl_FragColor = vec4( pos + velocity,1.0 );
}
`;

const renderVertexShader = `
uniform sampler2D positions;//RenderTarget containing the transformed positions
uniform float pointSize;
uniform float uTime;

vec4 permute(vec4 x){return mod(((x*34.0)+1.0)*x, 289.0);}
vec4 taylorInvSqrt(vec4 r){return 1.79284291400159 - 0.85373472095314 * r;}
vec3 fade(vec3 t) {return t*t*t*(t*(t*6.0-15.0)+10.0);}

float cnoise(vec3 P){
  vec3 Pi0 = floor(P); // Integer part for indexing
  vec3 Pi1 = Pi0 + vec3(1.0); // Integer part + 1
  Pi0 = mod(Pi0, 289.0);
  Pi1 = mod(Pi1, 289.0);
  vec3 Pf0 = fract(P); // Fractional part for interpolation
  vec3 Pf1 = Pf0 - vec3(1.0); // Fractional part - 1.0
  vec4 ix = vec4(Pi0.x, Pi1.x, Pi0.x, Pi1.x);
  vec4 iy = vec4(Pi0.yy, Pi1.yy);
  vec4 iz0 = Pi0.zzzz;
  vec4 iz1 = Pi1.zzzz;

  vec4 ixy = permute(permute(ix) + iy);
  vec4 ixy0 = permute(ixy + iz0);
  vec4 ixy1 = permute(ixy + iz1);

  vec4 gx0 = ixy0 / 7.0;
  vec4 gy0 = fract(floor(gx0) / 7.0) - 0.5;
  gx0 = fract(gx0);
  vec4 gz0 = vec4(0.5) - abs(gx0) - abs(gy0);
  vec4 sz0 = step(gz0, vec4(0.0));
  gx0 -= sz0 * (step(0.0, gx0) - 0.5);
  gy0 -= sz0 * (step(0.0, gy0) - 0.5);

  vec4 gx1 = ixy1 / 7.0;
  vec4 gy1 = fract(floor(gx1) / 7.0) - 0.5;
  gx1 = fract(gx1);
  vec4 gz1 = vec4(0.5) - abs(gx1) - abs(gy1);
  vec4 sz1 = step(gz1, vec4(0.0));
  gx1 -= sz1 * (step(0.0, gx1) - 0.5);
  gy1 -= sz1 * (step(0.0, gy1) - 0.5);

  vec3 g000 = vec3(gx0.x,gy0.x,gz0.x);
  vec3 g100 = vec3(gx0.y,gy0.y,gz0.y);
  vec3 g010 = vec3(gx0.z,gy0.z,gz0.z);
  vec3 g110 = vec3(gx0.w,gy0.w,gz0.w);
  vec3 g001 = vec3(gx1.x,gy1.x,gz1.x);
  vec3 g101 = vec3(gx1.y,gy1.y,gz1.y);
  vec3 g011 = vec3(gx1.z,gy1.z,gz1.z);
  vec3 g111 = vec3(gx1.w,gy1.w,gz1.w);

  vec4 norm0 = taylorInvSqrt(vec4(dot(g000, g000), dot(g010, g010), dot(g100, g100), dot(g110, g110)));
  g000 *= norm0.x;
  g010 *= norm0.y;
  g100 *= norm0.z;
  g110 *= norm0.w;
  vec4 norm1 = taylorInvSqrt(vec4(dot(g001, g001), dot(g011, g011), dot(g101, g101), dot(g111, g111)));
  g001 *= norm1.x;
  g011 *= norm1.y;
  g101 *= norm1.z;
  g111 *= norm1.w;

  float n000 = dot(g000, Pf0);
  float n100 = dot(g100, vec3(Pf1.x, Pf0.yz));
  float n010 = dot(g010, vec3(Pf0.x, Pf1.y, Pf0.z));
  float n110 = dot(g110, vec3(Pf1.xy, Pf0.z));
  float n001 = dot(g001, vec3(Pf0.xy, Pf1.z));
  float n101 = dot(g101, vec3(Pf1.x, Pf0.y, Pf1.z));
  float n011 = dot(g011, vec3(Pf0.x, Pf1.yz));
  float n111 = dot(g111, Pf1);

  vec3 fade_xyz = fade(Pf0);
  vec4 n_z = mix(vec4(n000, n100, n010, n110), vec4(n001, n101, n011, n111), fade_xyz.z);
  vec2 n_yz = mix(n_z.xy, n_z.zw, fade_xyz.y);
  float n_xyz = mix(n_yz.x, n_yz.y, fade_xyz.x);
  return 2.2 * n_xyz;
}

void main() {
  vec4 pos = texture2D( positions, position.xy ).xyzw;

  gl_Position = projectionMatrix * modelViewMatrix * vec4( pos.xyz, 1.0 );

  float noiseV = cnoise(pos.xyz + uTime);

  gl_PointSize = pointSize * noiseV;
}
`;

const renderFragmentShader = `
uniform sampler2D textureCircle;
void main() {
  vec4 customTextureColor = texture2D( textureCircle, gl_PointCoord );

  if (customTextureColor.a < 0.5) discard;

  gl_FragColor = vec4( vec3(0.1, 0.1, 0.1), 0.13 );
}
`;

const Particles = () => {
  const {
    scene,
    gl,
    camera,
    gl: { domElement },
  } = useThree();

  const renderRef = useRef();
  // width / height of the FBO
  var width = 512;
  var height = 512;

  // On recent three.js releases this class is named THREE.SphereGeometry.
  const sphereGeom = new THREE.SphereBufferGeometry(2);
  const material = new THREE.MeshStandardMaterial();
  const sphere = new THREE.Mesh(sphereGeom, material);

  var data = sampleMesh(width, height, 256, sphere);

  const positions = useMemo(
    () =>
      new THREE.DataTexture(
        data,
        width,
        height,
        THREE.RGBAFormat,
        THREE.FloatType
      ),
    [data, height, width]
  );

  useEffect(() => {
    positions.magFilter = THREE.NearestFilter;
    positions.minFilter = THREE.NearestFilter;
    positions.needsUpdate = true;
  }, [positions]);

  const textureLoader = new THREE.TextureLoader();
  const particleTexture = "/circle.png";

  var simulationUniforms = {
    positions: { value: positions },
    uTime: { value: 0 },
  };

  var renderUniforms = {
    positions: { value: null },
    pointSize: { value: 6.0 },
    camPos: { value: camera.position },
    textureCircle: {
      value: textureLoader.load(particleTexture),
    },
  };

  const [renderTarget] = React.useMemo(() => {
    const target = new THREE.WebGLRenderTarget(
      window.innerWidth,
      window.innerHeight,
      {
        minFilter: THREE.NearestFilter,
        magFilter: THREE.NearestFilter,
        format: THREE.RGBAFormat,
        stencilBuffer: false,

        type: THREE.FloatType,
      }
    );
    return [target];
  }, []);

  const orthoCamera = new THREE.OrthographicCamera(
    -1,
    1,
    1,
    -1,
    1 / Math.pow(2, 53),
    1
  );

  const sceneRtt = new THREE.Scene();

  const [geometry] = React.useMemo(() => {
    const geometry = new THREE.BufferGeometry();

    // Two triangles that together cover the whole quad
    geometry.setAttribute(
      "position",
      new THREE.BufferAttribute(
        new Float32Array([
          -1, -1, 0,
          1, -1, 0,
          1, 1, 0,
          -1, -1, 0,
          1, 1, 0,
          -1, 1, 0,
        ]),
        3
      )
    );
    geometry.setAttribute(
      "uv",
      new THREE.BufferAttribute(
        new Float32Array([0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0]),
        2
      )
    );

    return [geometry];
  }, []);

  const shaderMaterial = new THREE.ShaderMaterial({
    vertexShader: simulationVertexShader,
    fragmentShader: simulationFragmentShader,
    uniforms: simulationUniforms,
  });

  sceneRtt.add(new THREE.Mesh(geometry, shaderMaterial));
  sceneRtt.add(orthoCamera);

  useFrame((state) => {
    state.gl.setRenderTarget(renderTarget);
    state.gl.render(sceneRtt, orthoCamera);
    renderRef.current.uniforms.positions.value = renderTarget.texture;
    state.gl.setRenderTarget(null);
    state.gl.render(scene, camera);

    shaderMaterial.uniforms.uTime.value = state.clock.getElapsedTime();
    renderRef.current.uniforms.camPos.value = camera.position;
  });

  return (
    <>
      <color attach="background" args={["black"]} />
      <orbitControls args={[camera, domElement]} />
      <points>
        <bufferGeometry attach="geometry">
          <bufferAttribute
            attachObject={["attributes", "position"]}
            count={data.length / 4}
            itemSize={4}
            array={data}
          />
        </bufferGeometry>
        <shaderMaterial
          attach="material"
          ref={renderRef}
          vertexShader={renderVertexShader}
          fragmentShader={renderFragmentShader}
          uniforms={renderUniforms}
          blending={THREE.MultiplyBlending}
        />
      </points>
    </>
  );
};

export default Particles;
```

So we create a simple sphere:

```jsx
// On recent three.js releases this class is named THREE.SphereGeometry.
const sphereGeom = new THREE.SphereBufferGeometry(2);

const material = new THREE.MeshStandardMaterial();

const sphere = new THREE.Mesh(sphereGeom, material);
```

For our initial positions we will randomly sample the sphere 262,144 times. We can do this like so:

```jsx
var data = sampleMesh(width, height, 256, sphere);

function sampleMesh(width, height, size, sphere) {
  var len = width * height * 4;
  var data = new Float32Array(len);
  const sampler = new MeshSurfaceSampler(sphere).build();
  for (let i = 0; i < len; i += 4) {
    const tempPosition = new THREE.Vector3();
    sampler.sample(tempPosition);
    data[i] = tempPosition.x;
    data[i + 1] = tempPosition.y;
    data[i + 2] = tempPosition.z;
    data[i + 3] = Math.random() * 10.0;
  }
  return data;
}
```
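If you want to see what ends up in that array without pulling in three.js, the sketch below fills the same RGBA layout by sampling a sphere directly. Note that it swaps `MeshSurfaceSampler` for a normalised Gaussian direction, which is a different technique that gives a uniform distribution on an ideal sphere; `sampleSphereSurface`, `gaussian` and the `radius` parameter are names I made up for this example.

```javascript
// Standard normal via Box-Muller; (1 - random) avoids log(0).
function gaussian() {
  const u = 1 - Math.random();
  const v = Math.random();
  return Math.sqrt(-2 * Math.log(u)) * Math.cos(2 * Math.PI * v);
}

function sampleSphereSurface(width, height, radius) {
  const data = new Float32Array(width * height * 4);
  for (let i = 0; i < data.length; i += 4) {
    let x = 0, y = 0, z = 0, len = 0;
    // Re-draw in the (very unlikely) case of a zero-length vector.
    while (len === 0) {
      x = gaussian();
      y = gaussian();
      z = gaussian();
      len = Math.hypot(x, y, z);
    }
    data[i] = (x / len) * radius;
    data[i + 1] = (y / len) * radius;
    data[i + 2] = (z / len) * radius;
    data[i + 3] = Math.random() * 10.0; // spare per-particle value, as above
  }
  return data;
}

const sphereData = sampleSphereSurface(8, 8, 2);
```

The layout is the important part: four floats per particle, so particle `n` starts at index `n * 4`.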

We can create a DataTexture like so:

```jsx
const positions = useMemo(
  () =>
    new THREE.DataTexture(
      data,
      width,
      height,
      THREE.RGBAFormat,
      THREE.FloatType
    ),
  [data, height, width]
);

useEffect(() => {
  positions.magFilter = THREE.NearestFilter;
  positions.minFilter = THREE.NearestFilter;
  positions.needsUpdate = true;
}, [positions]);
```
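The `NearestFilter` setting matters because each texel is an independent particle, and blending neighbouring texels would mix unrelated positions. The sketch below illustrates this on a single row of two texels; `sampleNearest` and `sampleLinear` are simplified one-dimensional stand-ins I wrote for illustration, not three.js functions.

```javascript
// One row of two texels, each storing a single x position.
const row = [-2.0, 2.0];

// Nearest: pick the texel whose cell contains u (0..1).
function sampleNearest(texels, u) {
  const i = Math.min(texels.length - 1, Math.floor(u * texels.length));
  return texels[i];
}

// Linear: blend the two texels around u, measured from texel centres.
function sampleLinear(texels, u) {
  const f = Math.min(Math.max(u * texels.length - 0.5, 0), texels.length - 1);
  const i0 = Math.floor(f);
  const i1 = Math.min(texels.length - 1, i0 + 1);
  const t = f - i0;
  return texels[i0] * (1 - t) + texels[i1] * t;
}

const nearestValue = sampleNearest(row, 0.45); // stays on particle 0
const linearValue = sampleLinear(row, 0.45); // drifts towards particle 1
```

With nearest sampling the lookup returns particle 0's stored value untouched, while the linear version returns a blend that belongs to neither particle.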

SimulationShader:

This initial-positions DataTexture then gets passed to the simulation shader's uniforms as positions:

```jsx
var simulationUniforms = {
  positions: { value: positions },
  uTime: { value: 0 },
};
```

The simulation shader is where we move the particles.

The simulation is done in a separate scene, built the old way with plain Three.js classes rather than R3F components. We create a mesh that is a simple quad and give it a custom ShaderMaterial.

```jsx
const orthoCamera = new THREE.OrthographicCamera(
  -1,
  1,
  1,
  -1,
  1 / Math.pow(2, 53),
  1
);

const sceneRtt = new THREE.Scene();

const [geometry] = React.useMemo(() => {
  const geometry = new THREE.BufferGeometry();

  // Two triangles that together cover the whole quad
  geometry.setAttribute(
    "position",
    new THREE.BufferAttribute(
      new Float32Array([
        -1, -1, 0,
        1, -1, 0,
        1, 1, 0,
        -1, -1, 0,
        1, 1, 0,
        -1, 1, 0,
      ]),
      3
    )
  );
  geometry.setAttribute(
    "uv",
    new THREE.BufferAttribute(
      new Float32Array([0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0]),
      2
    )
  );

  return [geometry];
}, []);

const shaderMaterial = new THREE.ShaderMaterial({
  vertexShader: simulationVertexShader,
  fragmentShader: simulationFragmentShader,
  uniforms: simulationUniforms,
});

sceneRtt.add(new THREE.Mesh(geometry, shaderMaterial));
sceneRtt.add(orthoCamera);
```
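The eighteen position floats and twelve UV floats above describe two triangles that cover the whole -1..1 square. As a sanity check, this sketch rebuilds the same arrays from the six corners; `buildQuad` is a hypothetical helper, and the `v = (1 - y) / 2` mapping is simply what the UV values in the original array work out to.

```javascript
function buildQuad() {
  // Corner order matches the two triangles in the geometry above.
  const corners = [
    [-1, -1], [1, -1], [1, 1],
    [-1, -1], [1, 1], [-1, 1],
  ];
  const positions = [];
  const uvs = [];
  for (const [x, y] of corners) {
    positions.push(x, y, 0);
    // u runs left to right; v is flipped so the bottom edge has v = 1.
    uvs.push((x + 1) / 2, (1 - y) / 2);
  }
  return {
    positions: new Float32Array(positions),
    uvs: new Float32Array(uvs),
  };
}

const quad = buildQuad();
```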

We do this so that we can attach a render target, render this scene into it, then swap the render target out and render the particles, which are defined the R3F way. We do all of this in useFrame:

```jsx
useFrame((state) => {
  state.gl.setRenderTarget(renderTarget);
  state.gl.render(sceneRtt, orthoCamera);
  renderRef.current.uniforms.positions.value = renderTarget.texture;
  state.gl.setRenderTarget(null);
  state.gl.render(scene, camera);

  shaderMaterial.uniforms.uTime.value = state.clock.getElapsedTime();
});
```
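The order of those calls is the whole trick, so here it is once more with a stand-in renderer that only records what it is asked to do. `makeFakeRenderer` and `renderFrame` are hypothetical names for this sketch; in the real code `state.gl` is the three.js WebGL renderer.

```javascript
// Minimal fake that logs calls instead of drawing anything.
function makeFakeRenderer() {
  const calls = [];
  return {
    calls,
    setRenderTarget(target) {
      calls.push(target ? "target:" + target.name : "target:screen");
    },
    render(scene) {
      calls.push("render:" + scene.name);
    },
  };
}

function renderFrame(gl, sim, view, renderTarget, renderUniforms) {
  gl.setRenderTarget(renderTarget); // 1. draw into the texture
  gl.render(sim); // 2. run the simulation shader
  renderUniforms.positions.value = renderTarget.texture; // 3. hand over the result
  gl.setRenderTarget(null); // 4. back to the canvas
  gl.render(view); // 5. draw the points
}

const fakeGl = makeFakeRenderer();
const fakeTarget = { name: "fbo", texture: "fbo-texture" };
const fakeUniforms = { positions: { value: null } };
renderFrame(fakeGl, { name: "simulation" }, { name: "particles" }, fakeTarget, fakeUniforms);
```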

The render target needs to use THREE.RGBAFormat and THREE.FloatType. RGBA because we want to store 4 floats per texel in the render target texture: R, G and B hold [x, y, z], and alpha is a spare channel for one more per-particle value such as gl_PointSize (the simulation shader in this post simply writes 1.0 there). The texture generated from this render target is what gets passed from the simulation shader to the render shader, and it stores the updated positions.
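As a rough size check, a float RGBA texture for our particle count is small. This sketch assumes 4 bytes per 32-bit float channel and a texture sized to the 512 × 512 particle grid; `floatTargetBytes` is a hypothetical helper.

```javascript
function floatTargetBytes(width, height) {
  const channels = 4; // R, G, B, A
  const bytesPerChannel = 4; // 32-bit float
  return width * height * channels * bytesPerChannel;
}

const targetBytes = floatTargetBytes(512, 512);
const targetMiB = targetBytes / (1024 * 1024);
```

Under those assumptions the positions for all 262,144 particles fit in 4 MiB.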

One way to think about it is that we have divided a texture into 262,144 squares. Each square represents a particle and has one uniform colour, that is, one set of RGBA floats, which holds that particle's data.

For example, if the initial position texture is 8x8, the screen is divided into 64 squares. Every pixel or fragment inside one of these squares has the same position (colour) data passed to the render target, and neighbouring squares each hold their own, different RGBA values. All the pixels of a square move together as one particle. This is probably not the right way to think about it, but it cleared everything up in my mind!
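That 8x8 picture can be written down as a lookup from a pixel to the square it falls in. This is a sketch under the assumption that the target size is an exact multiple of the texture size; `squareForPixel` is a made-up name.

```javascript
function squareForPixel(px, py, targetSize, textureSize) {
  const pixelsPerSquare = targetSize / textureSize;
  const col = Math.floor(px / pixelsPerSquare);
  const row = Math.floor(py / pixelsPerSquare);
  return { col, row, particleIndex: row * textureSize + col };
}

// A 64x64 target showing an 8x8 texture: every square is 8x8 pixels.
const firstSquare = squareForPixel(3, 5, 64, 8);
const lastSquare = squareForPixel(63, 63, 64, 8);
```

Pixels (0..7, 0..7) all land in square 0, which is the sense in which they "move together as one particle".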

In the simulation shader we grab the initial position from the DataTexture, create a random velocity and direction vector using some noise, and then add this to the initial position in gl_FragColor. Because we are rendering into a render target, when this shader runs all of these RGBA squares are saved to its texture, which we can then pass to the render shader. In other words, we are storing positional info in a texture.

```glsl
void main() {
  vec3 pos = texture2D( positions, vUv ).rgb;

  // Some initial random numbers being generated
  vec2 tc = vUv * magic.xy;
  vec3 skewed_seed = vec3(0.7856 * magic.z + tc.y - tc.x) + magic.yzw;

  // Generating noise using these random initial numbers,
  // and generating a new noise every frame using uTime.
  vec3 velocity;
  velocity.x = cnoise(vec3(tc.x, tc.y, skewed_seed.x) + uTime);
  velocity.y = cnoise(vec3(tc.y, skewed_seed.y, tc.x) + uTime);
  velocity.z = cnoise(vec3(skewed_seed.z, tc.x, tc.y) + uTime);

  // Divide the velocity by 10.0 to slow it down a lot.
  velocity = normalize(velocity) / 10.0;

  gl_FragColor = vec4( pos + velocity,1.0 );
}
```
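Stripped of the noise, the last two statements of the shader are a fixed-length step away from the stored position. Here is the same arithmetic in JavaScript; `stepParticle` is a hypothetical name, and the raw velocity is passed in by hand rather than generated from `cnoise`.

```javascript
function stepParticle(pos, rawVelocity) {
  const len = Math.hypot(rawVelocity[0], rawVelocity[1], rawVelocity[2]);
  // normalize(velocity) / 10.0 -> the step is always 0.1 units long
  const v = rawVelocity.map((c) => c / len / 10.0);
  // gl_FragColor = vec4(pos + velocity, 1.0)
  return [pos[0] + v[0], pos[1] + v[1], pos[2] + v[2], 1.0];
}

const moved = stepParticle([2, 0, 0], [0, 3, 4]);
```

Because of the `normalize`, only the direction of the noise matters: every particle sits 0.1 units from its sampled starting point, and that direction changes as `uTime` advances.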

RenderShader:

The render shader has a vertex shader where we grab the position from the render target texture and use it to set the position of one point of the THREE.Points object. We also apply some noise to gl_PointSize.

```glsl
void main() {
  vec4 pos = texture2D( positions, position.xy ).xyzw;

  gl_Position = projectionMatrix * modelViewMatrix * vec4( pos.xyz, 1.0 );

  float noiseV = cnoise(pos.xyz + uTime);

  gl_PointSize = pointSize * noiseV;
}
```
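One thing to watch: classic Perlin noise such as `cnoise` returns values on both sides of zero, so `pointSize * noiseV` can come out negative, and a non-positive point size is not something to rely on. If that is not the look you want, one option (my suggestion, not what the shader above does) is to remap the noise to 0..1 first. `remappedPointSize` is a hypothetical helper that shows the arithmetic.

```javascript
// Assumes noise is roughly in the -1..1 range.
function remappedPointSize(pointSize, noise) {
  const n = Math.min(Math.max(noise * 0.5 + 0.5, 0), 1); // -1..1 -> 0..1
  return pointSize * n;
}

const smallest = remappedPointSize(6.0, -1);
const middle = remappedPointSize(6.0, 0);
const largest = remappedPointSize(6.0, 1);
```

In GLSL the equivalent would be a `clamp(noiseV * 0.5 + 0.5, 0.0, 1.0)` before the multiplication.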

The render fragment shader uses a custom circle texture, with everything else in the image transparent. We can then do a simple check in the shader to discard any fragments which have a low opacity:

```glsl
uniform sampler2D textureCircle;
void main() {
  vec4 customTextureColor = texture2D( textureCircle, gl_PointCoord );

  if (customTextureColor.a < 0.5) discard;

  gl_FragColor = vec4( vec3(0.1, 0.1, 0.1), 0.13 );
}
```

Why do this? So we can have a custom shape for each of the points. You could use any solid shape on a transparent background with the same approach.
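The same alpha test can be mimicked on the CPU. In this sketch a procedural circle stands in for circle.png; `circleAlpha` and `shadePoint` are hypothetical names, and `null` plays the role of `discard`.

```javascript
// Alpha of an ideal circle sprite: 1 inside radius 0.5, 0 outside.
function circleAlpha(u, v) {
  return Math.hypot(u - 0.5, v - 0.5) <= 0.5 ? 1.0 : 0.0;
}

// u and v run 0..1 across the point, like gl_PointCoord.
function shadePoint(u, v) {
  if (circleAlpha(u, v) < 0.5) return null; // discard
  return [0.1, 0.1, 0.1, 0.13]; // same colour as the shader
}

const centre = shadePoint(0.5, 0.5); // kept
const corner = shadePoint(0.0, 0.0); // discarded
```

Swapping `circleAlpha` for a lookup into any other alpha mask gives a different point shape with no other changes.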

Final Thoughts:

I hope you have found this useful, or that it at least gave you some ideas to play around with GPU particles. If you liked this, find me on LinkedIn and give me a thumbs up :)

You could easily add another geometry or mesh to sample from and transition between different shapes or add user interaction using the xy coordinates of the mouse.
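For the shape transition idea, one simple approach is to sample two meshes into two arrays and blend between them. This is only a sketch of the blend itself; `mixPositions` is a hypothetical helper, and in practice you would probably do the equivalent `mix()` in the simulation shader with two position textures.

```javascript
// Linear blend of two RGBA position arrays; t = 0 gives a, t = 1 gives b.
function mixPositions(a, b, t) {
  if (a.length !== b.length) throw new Error("arrays must match in length");
  const out = new Float32Array(a.length);
  for (let i = 0; i < a.length; i++) {
    out[i] = a[i] * (1 - t) + b[i] * t;
  }
  return out;
}

const shapeA = new Float32Array([0, 0, 0, 1]);
const shapeB = new Float32Array([2, 4, 6, 1]);
const halfway = mixPositions(shapeA, shapeB, 0.5);
```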